Functions/Subroutines | |

| subroutine | find_optpar (par, parmin, parmax, parprecision, npar) |

| subroutine, public | set_optpar_index () |

| subroutine, public | set_optim_modpar (npar, dpar, par) |

| subroutine | run_model_crit (npar, mpar, par, frozenstate, soilstate, aquiferstate, riverstate, lakestate, miscstate, criterion, status) |

| subroutine | run_model_perf (npar, mpar, par, frozenstate, soilstate, aquiferstate, riverstate, lakestate, miscstate, criterion, performance, status, condcrit, condthres) |

| subroutine | run_model_simout (npar, mpar, par, iens, runens, allens, frozenstate, soilstate, aquiferstate, riverstate, lakestate, miscstate, criterion, performance, status, condcrit, condthres) |

| subroutine, public | demc_simulation (dir, writeall, frozenstate, soilstate, aquiferstate, riverstate, lakestate, miscstate, npar) |

| subroutine | demc_runmedianparameters (npop, npar, numoptimpar, iens, frozenstate, soilstate, aquiferstate, riverstate, lakestate, miscstate, optcrit, performance, condcrit, condthres) |

| subroutine | demc_draw_r1r2 (jpop, npop, R1, R2) |

| subroutine | demc_proposalgeneration (jpop, R1, R2, gamma, sigma, npar, parprop, parprec) |

| subroutine | demc_crossover (jpop, npar, crossover, parprop) |

| subroutine | demc_controlparameters (npar, parprop, parmin, parmax) |

| subroutine | demc_acceptreject_proposal (npar, par, crit, numcrit, performance, jpop, iacc, condcr, condth) |

| subroutine | write_demc_calibration_log (genCounter, popCounter) |

| subroutine | write_demc_calibration_log_tail (funit) |

| subroutine, public | montecarlo_simulation (dir, writeall, frozenstate, soilstate, aquiferstate, riverstate, lakestate, miscstate, npar) |

| subroutine, public | bounded_montecarlo_simulation (taskMC, frozenstate, soilstate, aquiferstate, riverstate, lakestate, miscstate, npar) |

| subroutine | get_randompar (mpar, parmin, parmax, npar, par) |

| subroutine | reduce_parameter_space (mpar, parmin, parmax, npar) |

| subroutine | bookkeep_result_from_simulation (npar, mpar, nbest, par, crit, numcrit, performance) |

| subroutine, public | param_scanning (frozenstate, soilstate, aquiferstate, riverstate, lakestate, miscstate) |

| subroutine, public | stage_montecarlo (frozenstate, soilstate, aquiferstate, riverstate, lakestate, miscstate) |

| subroutine | stage_montecarlo_core_function (writeall, icenter, istage, nruns, parCenter, parRadStage, parMin, parMax, frozenstate, soilstate, aquiferstate, riverstate, lakestate, miscstate) |

| subroutine | get_randompar_by_radius (parCenter, parRadStage, parMin, parMax, par) |

| subroutine | write_stagemc_calibration_log (runCounter, centerCounter, stageCounter) |

| subroutine | write_stagemc_calibration_log_tail (funit) |

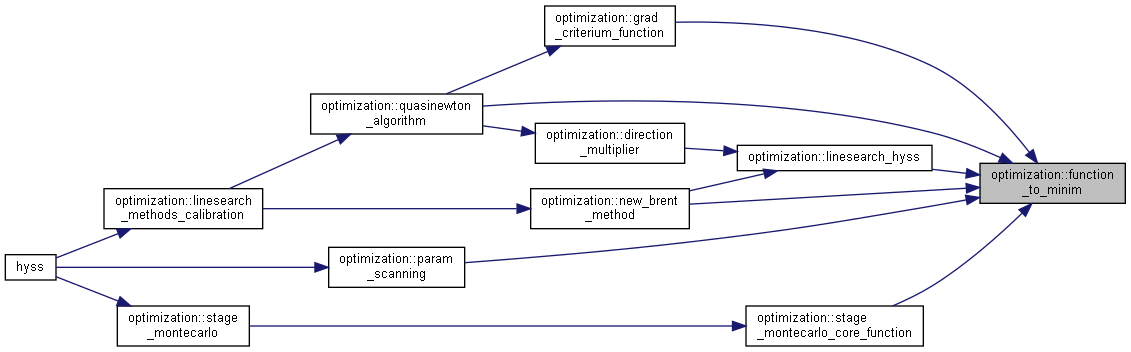

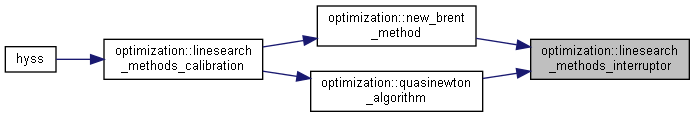

| subroutine, public | linesearch_methods_calibration (npar, frozenstate, soilstate, aquiferstate, riverstate, lakestate, miscstate, par) |

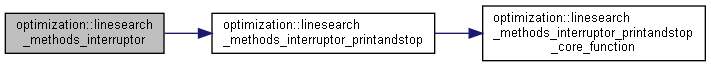

| subroutine | linesearch_methods_interruptor (iterCounter, critLast, critLastVect, parLast, parLastTable, parPrecision, finished) |

| subroutine | linesearch_methods_interruptor_printandstop (stopFlag, finished) |

| subroutine | linesearch_methods_interruptor_printandstop_core_function (stopFlag, fileunit) |

| subroutine | linesearch_hyss (xMinIn, xMaxIn, refPar, parPrecis, direction, frozenstate, soilstate, aquiferstate, riverstate, lakestate, miscstate, xBest, fBest, xGood, fGood) |

| subroutine | linesearch_check_decim (intLength, parPrecis, direction, stopFlag) |

| subroutine | function_to_minim (lambda, flambda, refPar, direction, frozenstate, soilstate, aquiferstate, riverstate, lakestate, miscstate) |

| subroutine | new_brent_method (critLastVect, parLastTable, frozenstate, soilstate, aquiferstate, riverstate, lakestate, miscstate) |

| subroutine | initialize_brent_calibrationlog () |

| subroutine | write_brent_calibrationlog (par, parStep, direction, newPar, lambdaBest, critBest) |

| subroutine | quasinewton_algorithm (critLastVect, parLastTable, frozenstate, soilstate, aquiferstate, riverstate, lakestate, miscstate) |

| subroutine | load_qnstarting_vector (parMin, parMax, par) |

| subroutine | grad_criterium_function (par, parMin, parMax, frozenstate, soilstate, aquiferstate, riverstate, lakestate, miscstate, gradVect, gradNorm) |

| subroutine | direction_multiplier (par, parMin, parMax, parPrecis, direction, frozenstate, soilstate, aquiferstate, riverstate, lakestate, miscstate, lambdaBest, critBest, prevCritBest) |

| subroutine | get_epsilon (par, parMin, parMax, dimCounter, epsilAbs, epsilRel, epsilVal) |

| subroutine | qn_inv_hessian_update (deltaVector, deltaGrad, invHessian, invHessianNew, finished) |

| subroutine | dfp_inv_hessian_update (deltaGrad, invHessian, DFPmatrix) |

| subroutine | bfgs_inv_hessian_update (deltaVector, deltaGrad, invHessian, denom, BFGSmatrix) |

| subroutine | print_calib_hysslog (iterCounter, param, critVal, gradNorm) |

| subroutine | print_calib_hysslog_noimp (iterCounter, param, critVal) |

| subroutine | initialize_qn_calibration_log (par, gradVect, gradNorm, invHessian) |

| subroutine | write_qn_calibration_log (par, gradVect, gradNorm, invHessian, direction, lambdaBest, critBest, iteratCounter) |

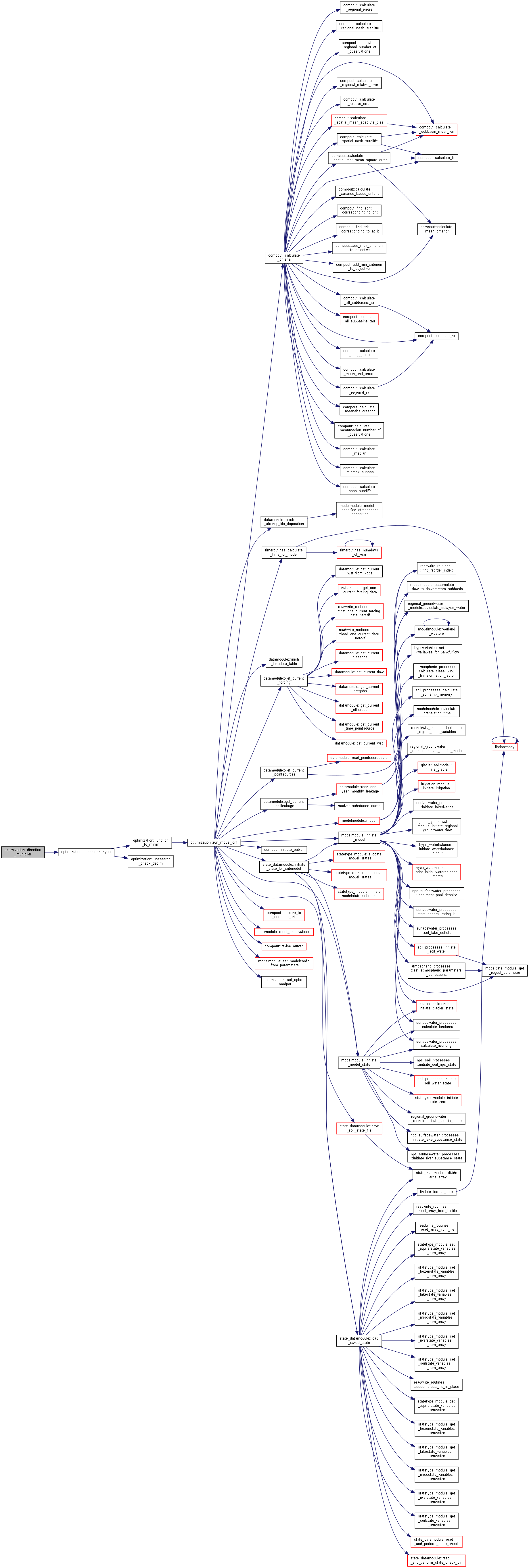

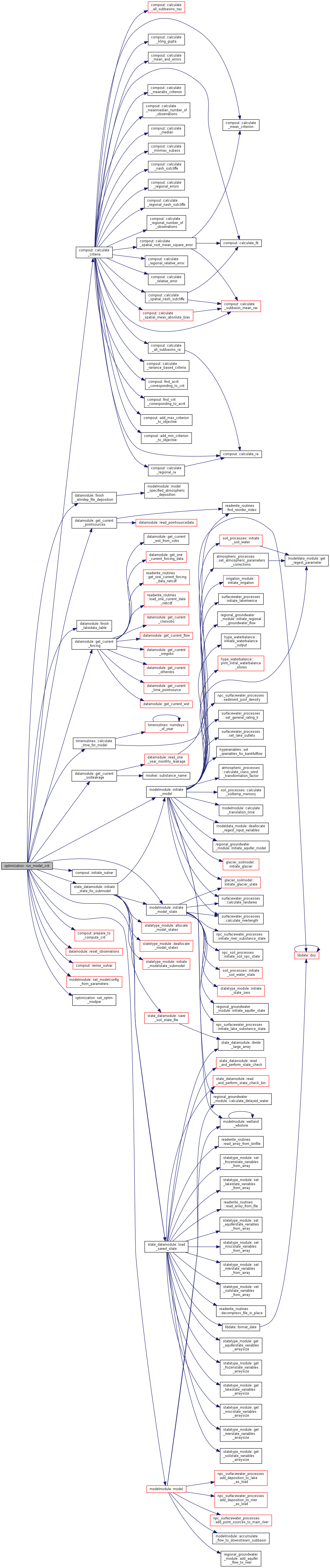

Detailed Description

Global optimization procedures for calibration of HYSS.

Function/Subroutine Documentation

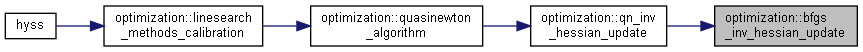

◆ bfgs_inv_hessian_update()

| subroutine optimization::bfgs_inv_hessian_update | ( | real, dimension(numoptimpar), intent(in) | deltaVector, |

| real, dimension(numoptimpar), intent(in) | deltaGrad, | ||

| real, dimension(numoptimpar, numoptimpar), intent(in) | invHessian, | ||

| real, intent(in) | denom, | ||

| real, dimension(numoptimpar, numoptimpar), intent(out) | BFGSmatrix | ||

| ) |

This routine computes the last term (BFGSmatrix)

BFGS: H_{k+1} = (s_k * s_k^T)/(y^T * s_k) + [I - (s_k * y_k^T)/(y_k^T * s_k)] * H_k * [I - (y_k * s_k^T)/(y_k^T * s_k)] Eq. (6.17) p. 140 in "Numerical optimization" (second edition), J. Nocedal, S.J. Wright

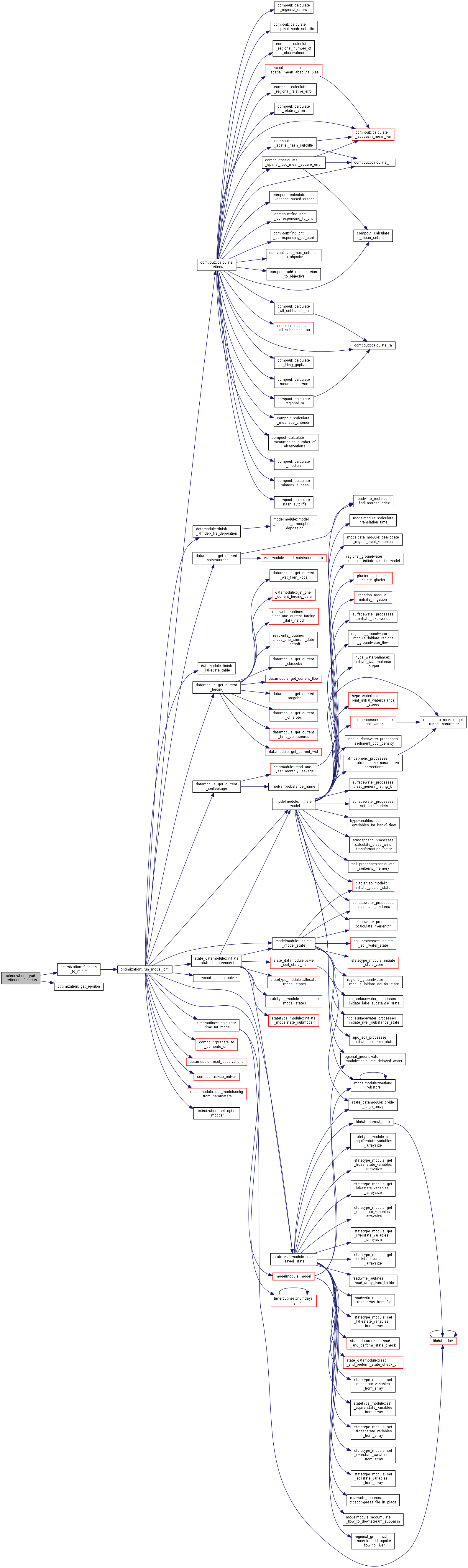

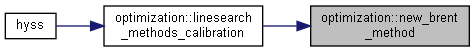

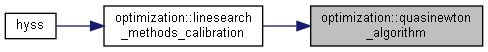

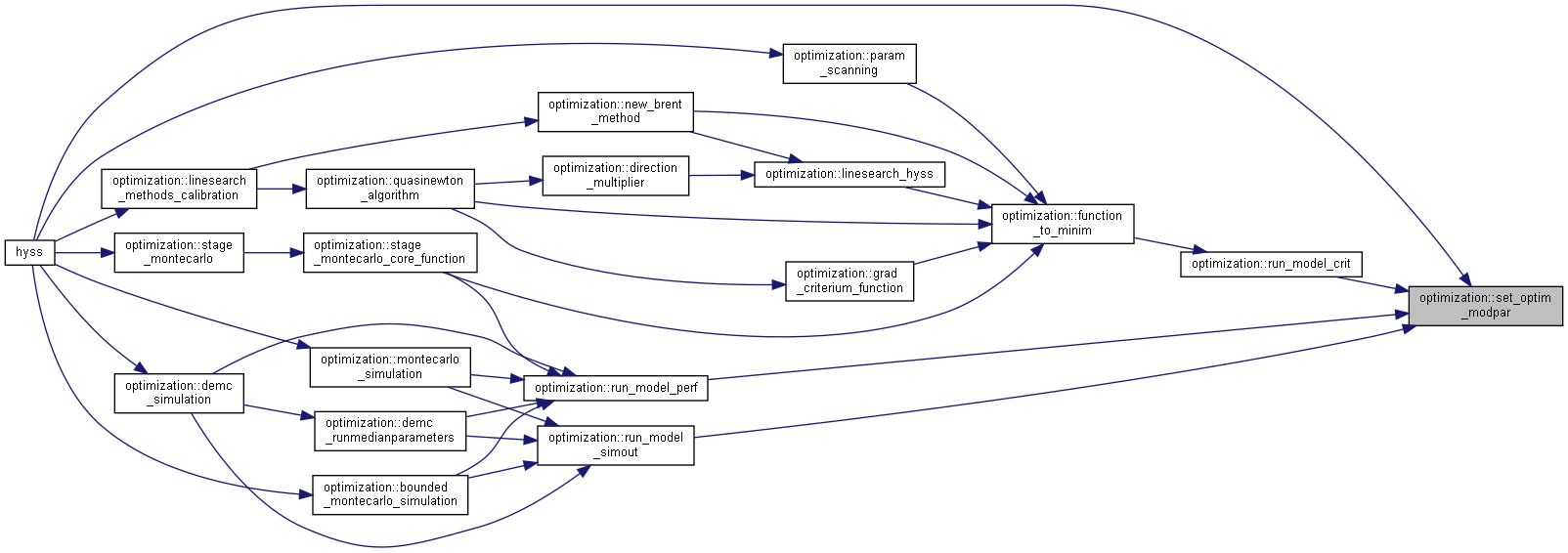

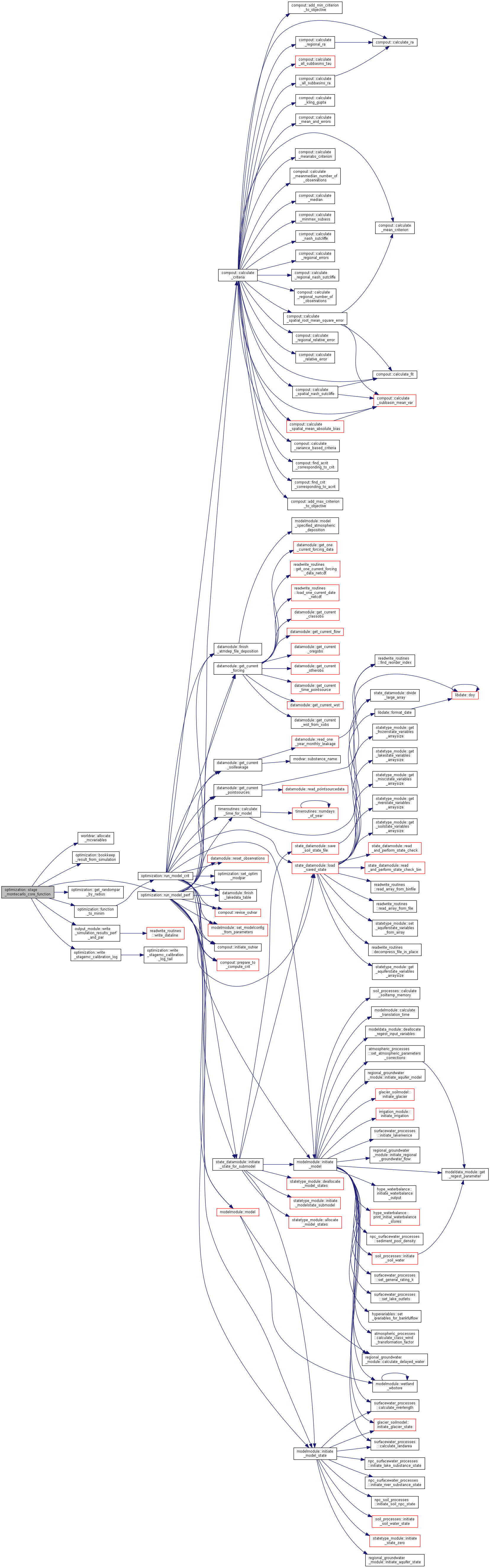

Here is the caller graph for this function:

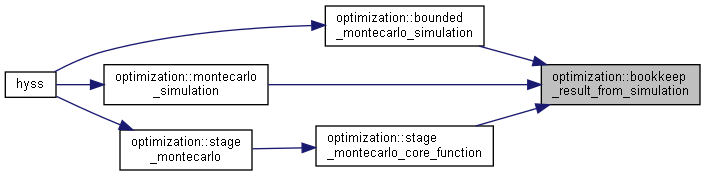

Here is the caller graph for this function:◆ bookkeep_result_from_simulation()

| subroutine optimization::bookkeep_result_from_simulation | ( | integer, intent(in) | npar, |

| integer, intent(in) | mpar, | ||

| integer, intent(in) | nbest, | ||

| real, dimension(mpar), intent(in) | par, | ||

| real, intent(in) | crit, | ||

| integer, intent(in) | numcrit, | ||

| real, dimension(maxperf,numcrit), intent(in) | performance | ||

| ) |

Save the results from the simulation for later use. Save the sofar nbest best and all values.

- Parameters

-

[in] npar Actual number of parameters [in] mpar Dimension of parameter-array [in] nbest Number of ensembles saved as best [in] par Current parameter values [in] crit Current optimization criterion value [in] numcrit Number of variables that performance criteria are calculated for [in] performance Performance criteria

Here is the caller graph for this function:

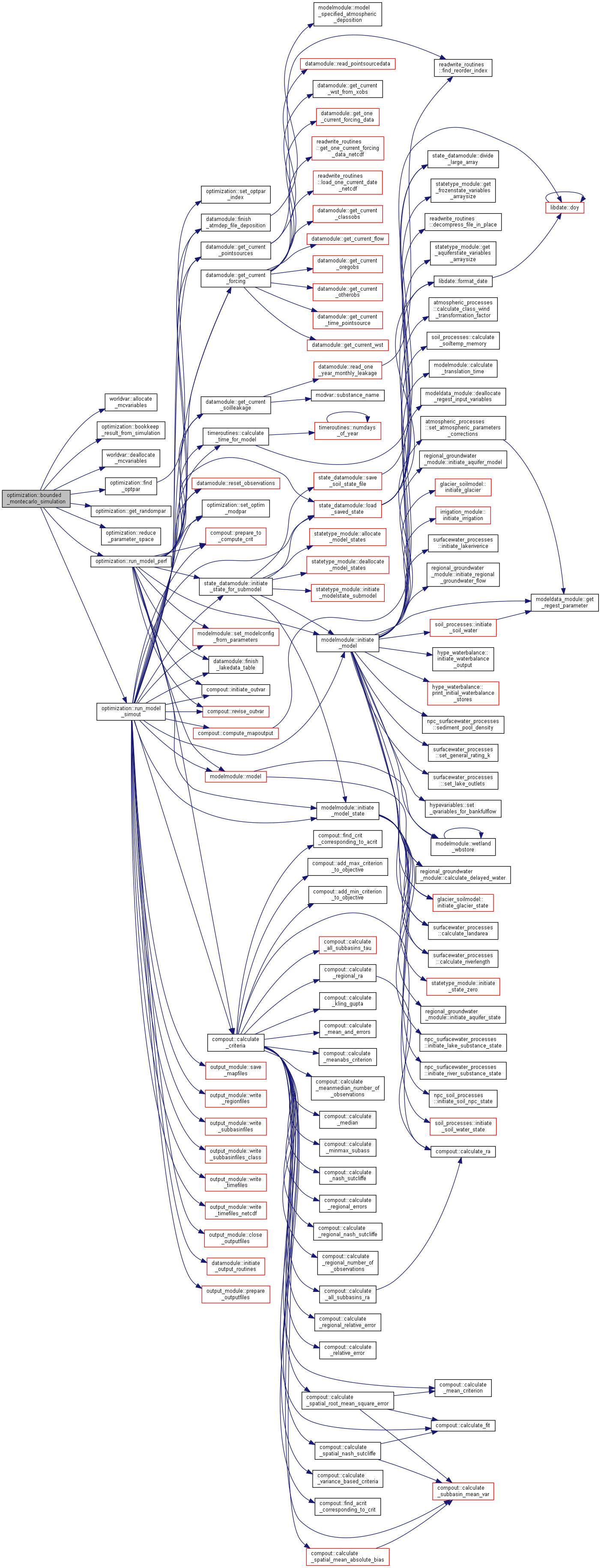

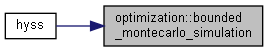

Here is the caller graph for this function:◆ bounded_montecarlo_simulation()

| subroutine, public optimization::bounded_montecarlo_simulation | ( | logical, intent(in) | taskMC, |

| type(snowicestatetype), intent(inout) | frozenstate, | ||

| type(soilstatetype), intent(inout) | soilstate, | ||

| type(aquiferstatetype), intent(inout) | aquiferstate, | ||

| type(riverstatetype), intent(inout) | riverstate, | ||

| type(lakestatetype), intent(inout) | lakestate, | ||

| type(miscstatetype), intent(inout) | miscstate, | ||

| integer, intent(out) | npar | ||

| ) |

Successive MonteCarlo simulation with reduced parameter space.

- Parameters

-

[in] taskmc Flag for MonteCarlo simulation done [in,out] frozenstate Snow and ice states [in,out] soilstate Soil states [in,out] aquiferstate Aquifer states [in,out] riverstate River states [in,out] lakestate Lake states [in,out] miscstate Misc states [out] npar Number of parameters to be changed

Algorithm

Initiate MonteCarlo simulation; find parameters and intervals for them to use

Run initial MonteCarlo simulation; num_bpmc simulations, generating new random parameter values for each simulation

Meanwhile keep track of the num_ens best simulations for next stage

Start MonteCarlo simulation with reducing bounds successively; for each repetition (of num_bpmax)...

- Reducing the parameter space to contain the best simulations only

- Run a MonteCarlo simulation; num_bpmc simulations, generating new random parameter values for each simulation

- Meanwhile keep track of the num_ens best simulations for next stage

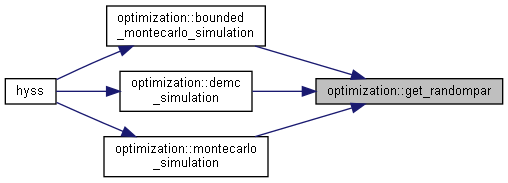

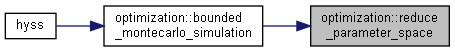

Here is the call graph for this function:

Here is the call graph for this function: Here is the caller graph for this function:

Here is the caller graph for this function:◆ demc_acceptreject_proposal()

|

private |

- Parameters

-

[in] npar Actual number of parameters [in] par Current parameter values [in] crit Current criterium value [in] numcrit Number of variables that criteria are calculated for [in] performance Other performance criteria [in] jpop Index of actual population [in,out] iacc Acceptance index (0)rejected (1)accepted [in] condcr Conditional criteria [in] condth Conditional acceptance threshold

Here is the caller graph for this function:

Here is the caller graph for this function:◆ demc_controlparameters()

| subroutine optimization::demc_controlparameters | ( | integer, intent(in) | npar, |

| real, dimension(npar), intent(inout) | parprop, | ||

| real, dimension(npar), intent(in) | parmin, | ||

| real, dimension(npar), intent(in) | parmax | ||

| ) |

Control parameter limits, and reflect proposal into the range if outside.

If still outside, make a relative reflection.

- Parameters

-

[in] npar Number of calibration parameters [in,out] parprop Proposal parameter vector [in] parmin Parameter interval lower limit [in] parmax Parameter interval upper limit

Here is the caller graph for this function:

Here is the caller graph for this function:◆ demc_crossover()

| subroutine optimization::demc_crossover | ( | integer, intent(in) | jpop, |

| integer, intent(in) | npar, | ||

| real, intent(in) | crossover, | ||

| real, dimension(npar), intent(inout) | parprop | ||

| ) |

With probability of crossover less than one, some parameter values are kept from the previous generation.

- Parameters

-

[in] jpop index of current population [in] npar Number of calibration paramters [in] crossover Probability of mutation cross over to child [in,out] parprop Proposal parameter vector

Here is the caller graph for this function:

Here is the caller graph for this function:◆ demc_draw_r1r2()

| subroutine optimization::demc_draw_r1r2 | ( | integer, intent(in) | jpop, |

| integer, intent(in) | npop, | ||

| integer, intent(out) | R1, | ||

| integer, intent(out) | R2 | ||

| ) |

Random mutation population R1 and R2; without replacement excluding j.

- Parameters

-

[in] jpop index of current population [in] npop number of population [out] r1 index of randomly selected population 1 [out] r2 index of randomly selected population 2

Algorithm

Draw a random number R1 and scale it to an integer (1:npop-1)

Draw second random number R2 until R1 not equal to R2

Re-scale random numbers to integer scale (1:npop) excluding j

Here is the caller graph for this function:

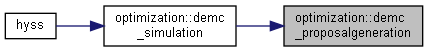

Here is the caller graph for this function:◆ demc_proposalgeneration()

|

private |

Proposal parameter vector, by mutation of R1, R2 and j.

- Parameters

-

[in] jpop index of current population [in] r1 index of randomly selected population 1 [in] r2 index of randomly selected population 2 [in] npar Number of calibration parameters [in] gamma mutation scaling factor [in] sigma standard deviation of sampling error [in,out] parprop Proposal parameter vector [in,out] parprec Precision sought for parameters

Here is the caller graph for this function:

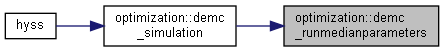

Here is the caller graph for this function:◆ demc_runmedianparameters()

| subroutine optimization::demc_runmedianparameters | ( | integer, intent(in) | npop, |

| integer, intent(in) | npar, | ||

| integer, intent(in) | numoptimpar, | ||

| integer, intent(in) | iens, | ||

| type(snowicestatetype), intent(inout) | frozenstate, | ||

| type(soilstatetype), intent(inout) | soilstate, | ||

| type(aquiferstatetype), intent(inout) | aquiferstate, | ||

| type(riverstatetype), intent(inout) | riverstate, | ||

| type(lakestatetype), intent(inout) | lakestate, | ||

| type(miscstatetype), intent(inout) | miscstate, | ||

| real, intent(inout) | optcrit, | ||

| real, dimension(maxperf,nacrit), intent(inout) | performance, | ||

| real, intent(inout) | condcrit, | ||

| real, intent(inout) | condthres | ||

| ) |

- Parameters

-

[in] npop Number of populations [in] npar Number of parameters to be changed [in] numoptimpar Effective amount of optimization parameters [in] iens Current simulation [in,out] frozenstate Snow and ice states [in,out] soilstate Soil states [in,out] aquiferstate Aquifer states [in,out] riverstate River states [in,out] lakestate Lake states [in,out] miscstate Misc states [in,out] optcrit Optimization criterion [in,out] performance Simulation performance criteria [in,out] condcrit Conditional criterion [in,out] condthres Threshold for conditional criterion

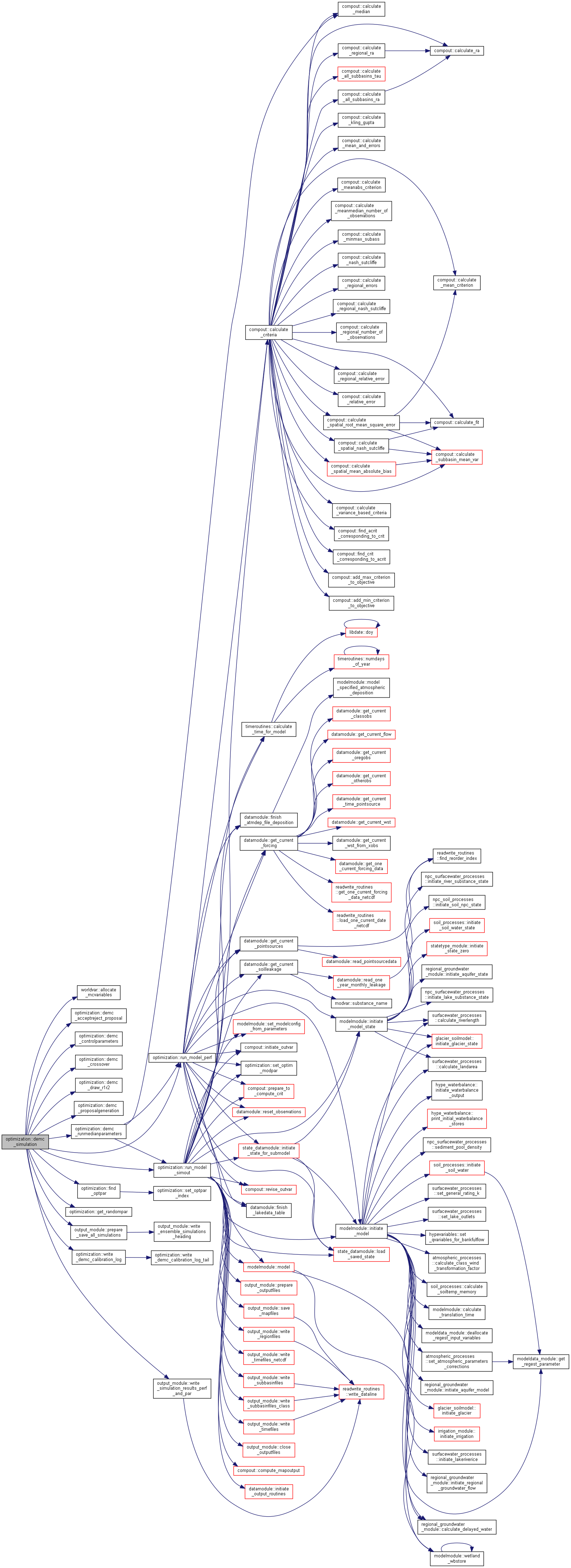

Here is the call graph for this function:

Here is the call graph for this function: Here is the caller graph for this function:

Here is the caller graph for this function:◆ demc_simulation()

| subroutine, public optimization::demc_simulation | ( | character(len=*), intent(in) | dir, |

| logical, intent(in) | writeall, | ||

| type(snowicestatetype), intent(inout) | frozenstate, | ||

| type(soilstatetype), intent(inout) | soilstate, | ||

| type(aquiferstatetype), intent(inout) | aquiferstate, | ||

| type(riverstatetype), intent(inout) | riverstate, | ||

| type(lakestatetype), intent(inout) | lakestate, | ||

| type(miscstatetype), intent(inout) | miscstate, | ||

| integer, intent(out) | npar | ||

| ) |

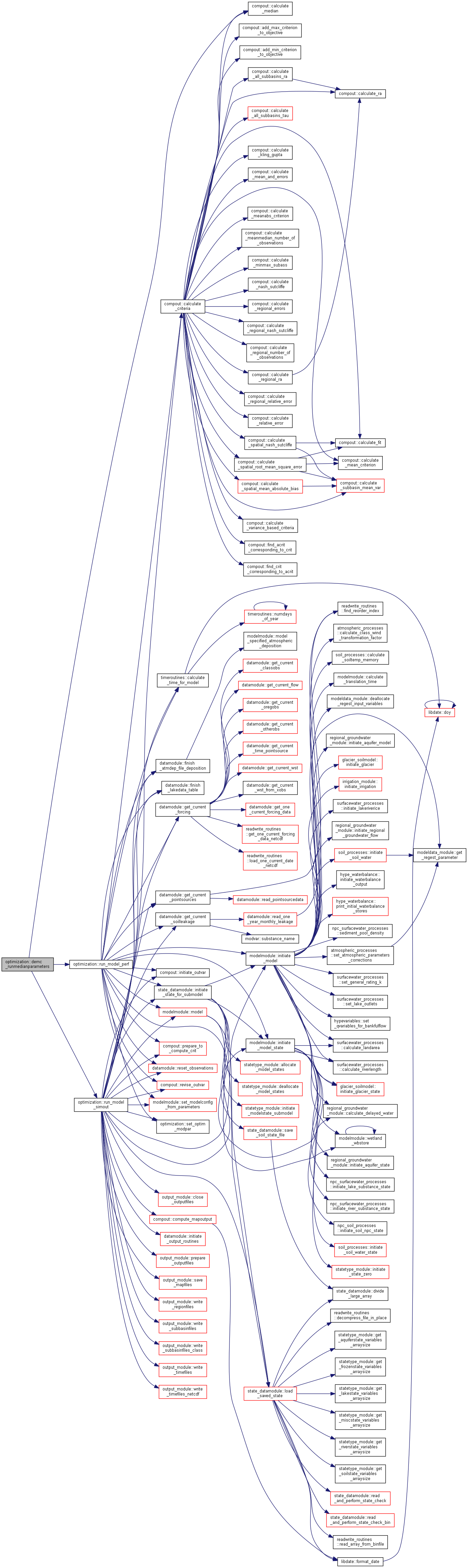

Perform a DE-MC simulation.

Differential Evolution-Markov Chain (DEMC) introduces uncertainty estimate in the DE optimization algorithm by applying the Metropolis- Hastings acceptance criteria, and by adding a random number in the DE proposal generation. Another way of describing DEMC is that it is a simplified version of MCMC, where the DE proposal generation is used instead of the otherwise cumbersome MCMC jumps based on covariance and multivariate normal distributions. The genetic DE algorithm overcomes the difficult jump generation by generating the proposal directly from the current populations, instead of having to tune the covariance matrix and all that stuff. The advantage of DEMC versus plain DE is both the possibility to get a probability based uncertainty estimate and a better convergence towards the global optima.

References:

Cajo J. F. Ter Braak (2006). A Markov Chain Monte Carlo version of the genetic algorithm Differential Evolution: easy Bayesian computing for real parameter spaces. Stat Comput, 16:239-249, DOI 10.1007/s11222-006-8769-1

Ronkkonen et al. (2009).

- Parameters

-

[in] dir File directory [in] writeall Write result to file [in,out] frozenstate Snow and ice states [in,out] soilstate Soil states [in,out] aquiferstate Aquifer states [in,out] riverstate River states [in,out] lakestate Lake states [in,out] miscstate Misc states [out] npar Number of parameters to be changed

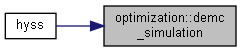

Here is the call graph for this function:

Here is the call graph for this function: Here is the caller graph for this function:

Here is the caller graph for this function:◆ dfp_inv_hessian_update()

| subroutine optimization::dfp_inv_hessian_update | ( | real, dimension(numoptimpar), intent(in) | deltaGrad, |

| real, dimension(numoptimpar, numoptimpar), intent(in) | invHessian, | ||

| real, dimension(numoptimpar, numoptimpar), intent(out) | DFPmatrix | ||

| ) |

This routine computes the last term (DFPmatrix) DFP: H_{k+1} = H_k + [s_k * s_k^T]/[y^T * s_k] - [H_k * y_k * y_k^T * H_k]/[y_k^T * H_k * y_k] Eq. (6.15) p. 139 in "Numerical optimization" (second edition), J. Nocedal, S.J. Wright.

- Parameters

-

[in] deltagrad y_k, difference in gradient at step k [in] invhessian H_k, inverse Hessian at step k [out] dfpmatrix Last term of the DFP upgrade formula

Here is the caller graph for this function:

Here is the caller graph for this function:◆ direction_multiplier()

| subroutine optimization::direction_multiplier | ( | real, dimension(numoptimpar), intent(in) | par, |

| real, dimension(numoptimpar), intent(in) | parMin, | ||

| real, dimension(numoptimpar), intent(in) | parMax, | ||

| real, dimension(numoptimpar), intent(in) | parPrecis, | ||

| real, dimension(numoptimpar), intent(in) | direction, | ||

| type(snowicestatetype), intent(inout) | frozenstate, | ||

| type(soilstatetype), intent(inout) | soilstate, | ||

| type(aquiferstatetype), intent(inout) | aquiferstate, | ||

| type(riverstatetype), intent(inout) | riverstate, | ||

| type(lakestatetype), intent(inout) | lakestate, | ||

| type(miscstatetype), intent(inout) | miscstate, | ||

| real, intent(out) | lambdaBest, | ||

| real, intent(out) | critBest, | ||

| real, intent(in) | prevCritBest | ||

| ) |

Determines "lambda", the fraction of direction step to take, by linear search of minimum.

- Parameters

-

[in] par Current algorithm position in parameter space [in] parmin Lower interval limits, from optpar.txt, these limits are absolute, serve as permanent/reference bounds [in] parmax Upper interval limits, from optpar.txt, these limits are absolute, serve as permanent/reference bounds [in] parprecis Precision, from optpar.txt [in] direction Vectorial direction between current and new best vector [in,out] frozenstate Snow and ice states [in,out] soilstate Soil states [in,out] aquiferstate Aquifer states [in,out] riverstate River states [in,out] lakestate Lake states [in,out] miscstate Misc states [out] lambdabest Best step in direction to take [out] critbest Criterion of best step

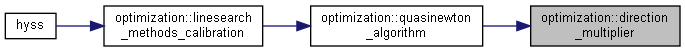

Here is the call graph for this function:

Here is the call graph for this function: Here is the caller graph for this function:

Here is the caller graph for this function:◆ find_optpar()

|

private |

Find the model parameters to be optimized and set the parameter information variables to be used in optimization.

Consequences Module worldvar variable parindex is set

- Parameters

-

[out] par Current parameter values for calibrated parameter [out] parmin Lower parameter limit for calibration [out] parmax Upper parameter limit for calibration [out] parprecision Decimal precision up to which parameter is to be calibrated [out] npar Actual number of parameters to be looped, = numoptimpar

Algorithm

Allocate and set WORLDVAR parindex

Find the starting parameter values; geometric or aritmetric mean

Find the information on parameters to be calibrated

Here is the call graph for this function:

Here is the call graph for this function: Here is the caller graph for this function:

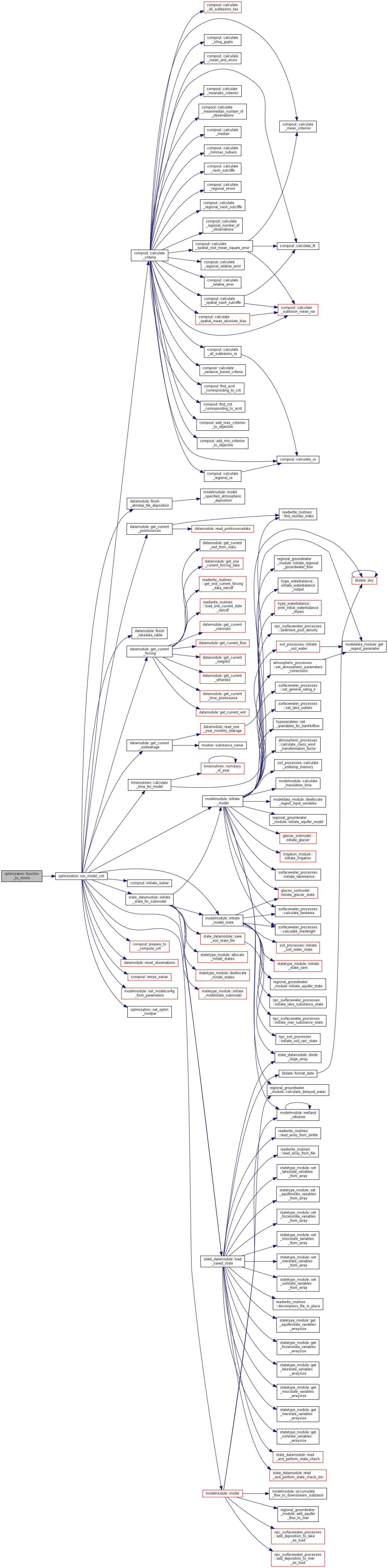

Here is the caller graph for this function:◆ function_to_minim()

| subroutine optimization::function_to_minim | ( | real, intent(in) | lambda, |

| real, intent(out) | flambda, | ||

| real, dimension(numoptimpar), intent(in) | refPar, | ||

| real, dimension(numoptimpar), intent(in) | direction, | ||

| type(snowicestatetype), intent(inout) | frozenstate, | ||

| type(soilstatetype), intent(inout) | soilstate, | ||

| type(aquiferstatetype), intent(inout) | aquiferstate, | ||

| type(riverstatetype), intent(inout) | riverstate, | ||

| type(lakestatetype), intent(inout) | lakestate, | ||

| type(miscstatetype), intent(inout) | miscstate | ||

| ) |

Choose objective function, HYSS model or one of the simple debug functions.

- Parameters

-

[in] lambda length of current step [out] flambda optimization criteria value [in] refpar reference parameter values [in] direction current direction of step [in,out] frozenstate Snow and ice states [in,out] soilstate Soil states [in,out] aquiferstate Aquifer states [in,out] riverstate River states [in,out] lakestate Lake state [in,out] miscstate Misc states

Here is the call graph for this function:

Here is the call graph for this function: Here is the caller graph for this function:

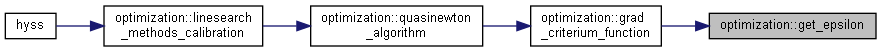

Here is the caller graph for this function:◆ get_epsilon()

| subroutine optimization::get_epsilon | ( | real, dimension(numoptimpar), intent(in) | par, |

| real, dimension(numoptimpar), intent(in) | parMin, | ||

| real, dimension(numoptimpar), intent(in) | parMax, | ||

| integer, intent(in) | dimCounter, | ||

| real, intent(out) | epsilAbs, | ||

| real, intent(out) | epsilRel, | ||

| real, intent(out) | epsilVal | ||

| ) |

Determines "epsilon", the offset from parameter value used for numerical gradient calculation (central differences)

Note: in this numerical scheme, central differences are implemented so that epsilon MUST have the same sign as the parameter value

- Parameters

-

[in] par Current algorithm position in parameter space [in] parmin Parameter interval lower limit [in] parmax Parameter interval upper limit

Here is the caller graph for this function:

Here is the caller graph for this function:◆ get_randompar()

| subroutine optimization::get_randompar | ( | integer, intent(in) | mpar, |

| real, dimension(mpar), intent(in) | parmin, | ||

| real, dimension(mpar), intent(in) | parmax, | ||

| integer, intent(in) | npar, | ||

| real, dimension(mpar), intent(out) | par | ||

| ) |

Get new random values of parameters to be optimized from a uniform distribution between parmin and parmax.

- Parameters

-

[in] mpar Dimension of argument parameters [in] parmin Parameter interval lower limit [in] parmax Parameter interval upper limit [in] npar Actual number of parameters to get random number [out] par Current parameter values

Here is the caller graph for this function:

Here is the caller graph for this function:◆ get_randompar_by_radius()

| subroutine optimization::get_randompar_by_radius | ( | real, dimension(numoptimpar), intent(in) | parCenter, |

| real, dimension(numoptimpar), intent(in) | parRadStage, | ||

| real, dimension(numoptimpar), intent(in) | parMin, | ||

| real, dimension(numoptimpar), intent(in) | parMax, | ||

| real, dimension(numoptimpar), intent(out) | par | ||

| ) |

Get random values for parameters to be optimized.

Parameter space delimitation achieved by specifying center and radius: parCenter, parRadStage (vectors, all parameter dimensions at once)

- Parameters

-

[in] parcenter Parameter space center for current stage [in] parradstage Array with parameter space radii for current stage [in] parmin Parameter interval lower limit (reference bounds, do not touch!!) [in] parmax Parameter interval upper limit (reference bounds, do not touch!!) [out] par Current parameter values, to be send to model

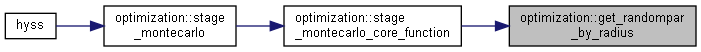

Here is the caller graph for this function:

Here is the caller graph for this function:◆ grad_criterium_function()

| subroutine optimization::grad_criterium_function | ( | real, dimension(numoptimpar), intent(in) | par, |

| real, dimension(numoptimpar), intent(in) | parMin, | ||

| real, dimension(numoptimpar), intent(in) | parMax, | ||

| type(snowicestatetype), intent(inout) | frozenstate, | ||

| type(soilstatetype), intent(inout) | soilstate, | ||

| type(aquiferstatetype), intent(inout) | aquiferstate, | ||

| type(riverstatetype), intent(inout) | riverstate, | ||

| type(lakestatetype), intent(inout) | lakestate, | ||

| type(miscstatetype), intent(inout) | miscstate, | ||

| real, dimension(numoptimpar), intent(out) | gradVect, | ||

| real, intent(out) | gradNorm | ||

| ) |

Computes the numerical gradient of the gradient function, in all dimensions.

- Parameters

-

[in] par Current best parameter set = algorithm current position in parameter space; to be tested for gradient [in] parmin Parameter interval lower limit [in] parmax Parameter interval upper limit [in,out] frozenstate Snow and ice states [in,out] soilstate Soil states [in,out] aquiferstate Aquifer states [in,out] riverstate River states [in,out] lakestate Lake states [in,out] miscstate Misc states [out] gradvect gradient [out] gradnorm gradient norm

Here is the call graph for this function:

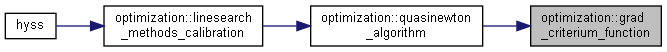

Here is the call graph for this function: Here is the caller graph for this function:

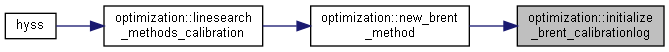

Here is the caller graph for this function:◆ initialize_brent_calibrationlog()

| subroutine optimization::initialize_brent_calibrationlog |

Here is the caller graph for this function:

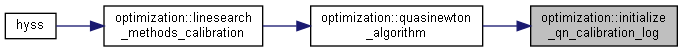

Here is the caller graph for this function:◆ initialize_qn_calibration_log()

| subroutine optimization::initialize_qn_calibration_log | ( | real, dimension(numoptimpar), intent(in) | par, |

| real, dimension(numoptimpar), intent(in) | gradVect, | ||

| real, intent(in) | gradNorm, | ||

| real, dimension(numoptimpar,numoptimpar), intent(in) | invHessian | ||

| ) |

Here is the caller graph for this function:

Here is the caller graph for this function:◆ linesearch_check_decim()

| subroutine optimization::linesearch_check_decim | ( | real, intent(in) | intLength, |

| real, dimension(numoptimpar), intent(in) | parPrecis, | ||

| real, dimension(numoptimpar), intent(in) | direction, | ||

| logical, intent(out) | stopFlag | ||

| ) |

Check if wanted parameter precision is reached. The line search interval is less than precision for all parameters to be optimized in this line search.

- Parameters

-

[in] intlength Current line search interval length [in] parprecis precision asked for [in] direction current direction of search [out] stopflag flag for line serach interval less than precision asked

Here is the caller graph for this function:

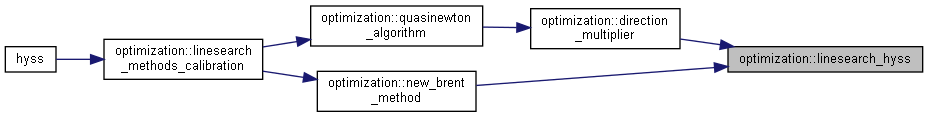

Here is the caller graph for this function:◆ linesearch_hyss()

| subroutine optimization::linesearch_hyss | ( | real, intent(in) | xMinIn, |

| real, intent(in) | xMaxIn, | ||

| real, dimension(numoptimpar), intent(in) | refPar, | ||

| real, dimension(numoptimpar), intent(in) | parPrecis, | ||

| real, dimension(numoptimpar), intent(in) | direction, | ||

| type(snowicestatetype), intent(inout) | frozenstate, | ||

| type(soilstatetype), intent(inout) | soilstate, | ||

| type(aquiferstatetype), intent(inout) | aquiferstate, | ||

| type(riverstatetype), intent(inout) | riverstate, | ||

| type(lakestatetype), intent(inout) | lakestate, | ||

| type(miscstatetype), intent(inout) | miscstate, | ||

| real, intent(out) | xBest, | ||

| real, intent(out) | fBest, | ||

| real, intent(in), optional | xGood, | ||

| real, intent(in), optional | fGood | ||

| ) |

Line search for minimum.

- Parameters

-

[in] xminin point search interval minimum [in] xmaxin point search interval maximum [in] refpar parameter values at origin, where x=0 [in] parprecis precision [in] direction direction of line search [in,out] frozenstate Snow and ice states [in,out] soilstate Soil states [in,out] aquiferstate Aquifer states [in,out] riverstate River states [in,out] lakestate Lake states [in,out] miscstate Misc states [out] xbest best point found [out] fbest function value at best point [in] xgood best previous point [in] fgood function value at best previous point

Algorithm

Initialize the numerical parameters

Compute the starting "golden ratio" point, its related function value, and start the output log

Define tolerances for point and parabola accept/reject tests

Here starts the iterative procedure

- If distance is large enough, do a parabola fit

If no new point was found by parabola fit, find one with golden step method instead

Simulate the model for the new point to calculte the optimization criterion

Update optimal points (theree are saved)

Print iteration results in log

Update tolerances in accordance to new best point

Check if parameter precision is reached

Here is the call graph for this function:

Here is the call graph for this function: Here is the caller graph for this function:

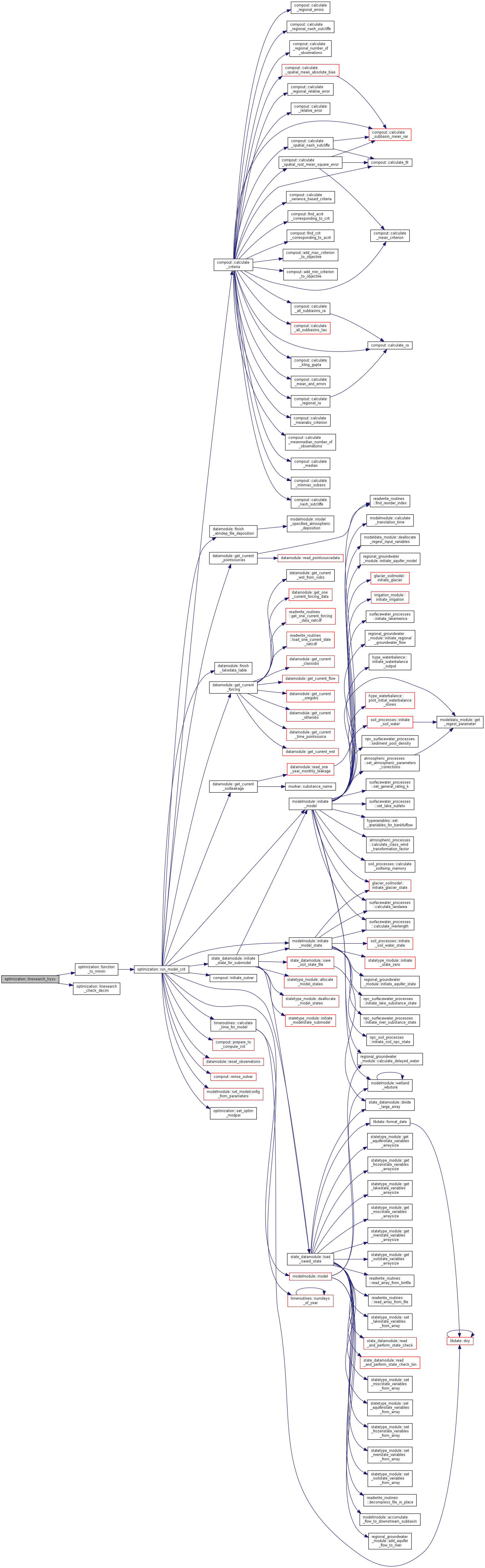

Here is the caller graph for this function:◆ linesearch_methods_calibration()

| subroutine, public optimization::linesearch_methods_calibration | ( | integer, intent(in) | npar, |

| type(snowicestatetype), intent(inout) | frozenstate, | ||

| type(soilstatetype), intent(inout) | soilstate, | ||

| type(aquiferstatetype), intent(inout) | aquiferstate, | ||

| type(riverstatetype), intent(inout) | riverstate, | ||

| type(lakestatetype), intent(inout) | lakestate, | ||

| type(miscstatetype), intent(inout) | miscstate, | ||

| real, dimension(npar), intent(out) | par | ||

| ) |

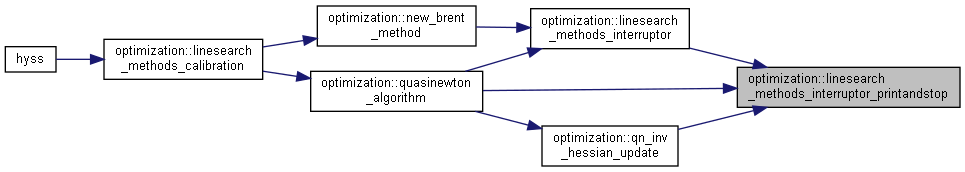

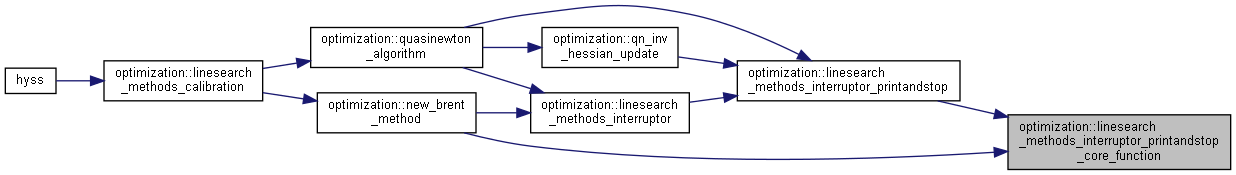

This routine initializes the variables common to all non-MonteCarlo methods (variables used in the interruptor routine in particular, see next), then proceeds to the required optimisation method.

- Parameters

-

[in] npar Number of opt parameters/dimension of par-variable [in,out] frozenstate Snow and ice states [in,out] soilstate Soil states [in,out] aquiferstate Aquifer states [in,out] riverstate River states [in,out] lakestate Lake states [in,out] miscstate Misc states [out] par Optimised values of parameters

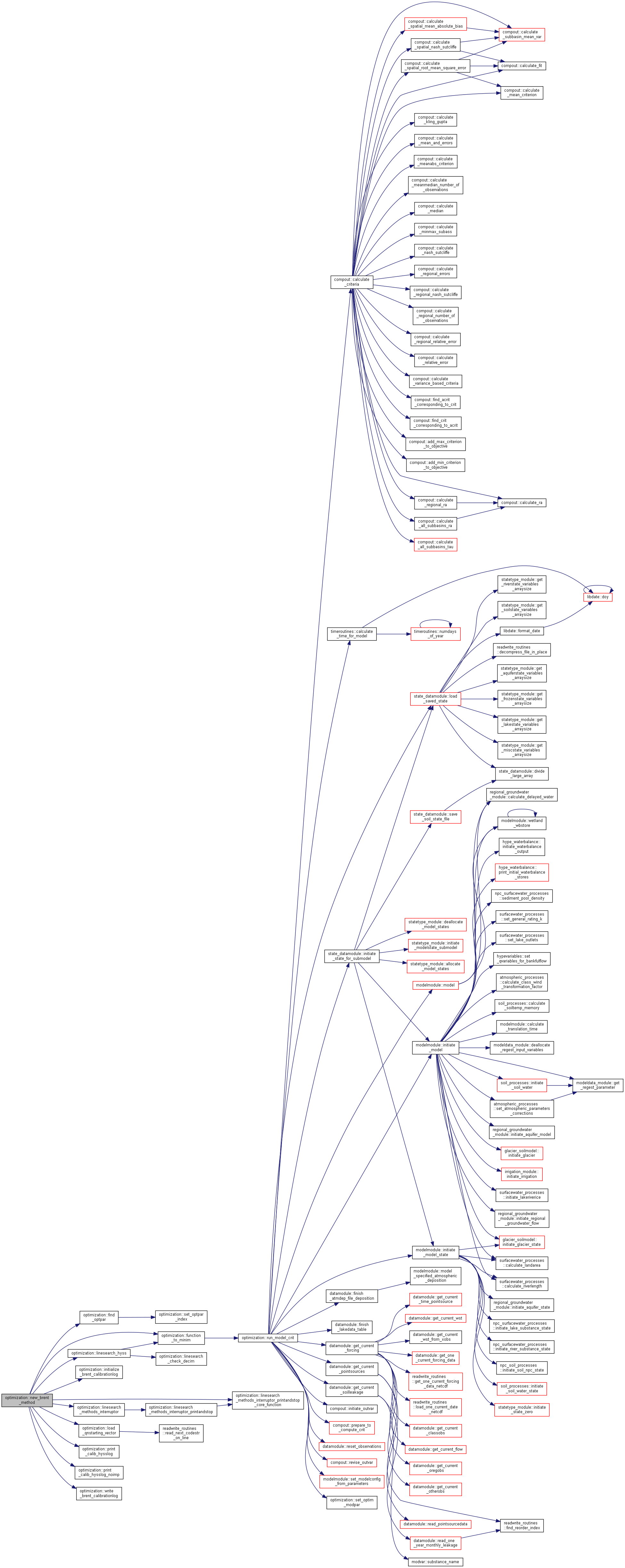

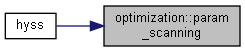

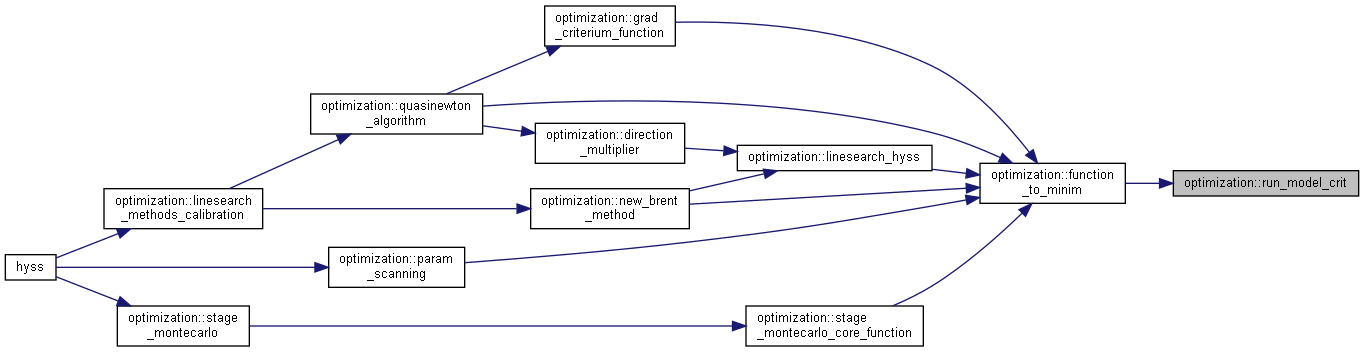

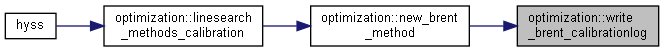

Here is the call graph for this function:

Here is the call graph for this function: Here is the caller graph for this function:

Here is the caller graph for this function:◆ linesearch_methods_interruptor()

| subroutine optimization::linesearch_methods_interruptor | ( | integer, intent(in) | iterCounter, |

| real, intent(in) | critLast, | ||

| real, dimension(optim%cal_improvcrititer), intent(inout) | critLastVect, | ||

| real, dimension(numoptimpar), intent(in) | parLast, | ||

| real, dimension(optim%cal_improvparamiter, numoptimpar), intent(inout) | parLastTable, | ||

| real, dimension(numoptimpar), intent(in) | parPrecision, | ||

| logical, intent(out) | finished | ||

| ) |

Establish statistics on the latest calibration iterations to check for criteria and parameter improvements It also aborts calibration if method timed-out, or max amount of calibration iterations is reached.

Here is the call graph for this function:

Here is the call graph for this function: Here is the caller graph for this function:

Here is the caller graph for this function:◆ linesearch_methods_interruptor_printandstop()

| subroutine optimization::linesearch_methods_interruptor_printandstop | ( | integer, intent(in) | stopFlag, |

| logical, intent(out) | finished | ||

| ) |

Print the total calibration time and the amount of function calls before ending calibration.

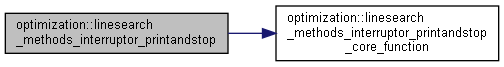

Here is the call graph for this function:

Here is the call graph for this function: Here is the caller graph for this function:

Here is the caller graph for this function:◆ linesearch_methods_interruptor_printandstop_core_function()

| subroutine optimization::linesearch_methods_interruptor_printandstop_core_function | ( | integer, intent(in) | stopFlag, |

| integer, intent(in) | fileunit | ||

| ) |

Print the total calibration time and the amount of function calls before stopping.

Here is the caller graph for this function:

Here is the caller graph for this function:◆ load_qnstarting_vector()

| subroutine optimization::load_qnstarting_vector | ( | real, dimension(numoptimpar), intent(in) | parMin, |

| real, dimension(numoptimpar), intent(in) | parMax, | ||

| real, dimension(numoptimpar), intent(out) | par | ||

| ) |

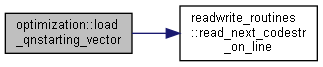

This subroutine loads the starting set of parameter values for quasi-Newton optimization. Note: parameter order according to optpar.txt required (not checked) and only the parameters with (larger than zero) intervals. It checks if given parameter values are within optimization interval boundaries.

- Parameters

-

[in] parmin Optimization interval lower boundary [in] parmax Optimization interval upper boundary [out] par Vector with the optimization parameters

Here is the call graph for this function:

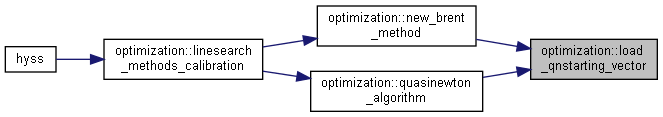

Here is the call graph for this function: Here is the caller graph for this function:

Here is the caller graph for this function:◆ montecarlo_simulation()

| subroutine, public optimization::montecarlo_simulation | ( | character(len=*), intent(in) | dir, |

| logical, intent(in) | writeall, | ||

| type(snowicestatetype), intent(inout) | frozenstate, | ||

| type(soilstatetype), intent(inout) | soilstate, | ||

| type(aquiferstatetype), intent(inout) | aquiferstate, | ||

| type(riverstatetype), intent(inout) | riverstate, | ||

| type(lakestatetype), intent(inout) | lakestate, | ||

| type(miscstatetype), intent(inout) | miscstate, | ||

| integer, intent(out) | npar | ||

| ) |

Simple MonteCarlo simulation with random parameter values.

- Parameters

-

[in] dir File directory [in] writeall Write result to file [in,out] frozenstate Snow and ice states [in,out] soilstate Soil states [in,out] aquiferstate Aquifer states [in,out] riverstate River states [in,out] lakestate Lake states [in,out] miscstate Misc states [out] npar Number of parameters to be changed

Algorithm

Initiate MonteCarlo simulation; find parameters and intervals for them to use

Simulate model num_mc times, generating new random parameter values for each simulation

Meanwhile keep track of the num_ens best simulations for final output

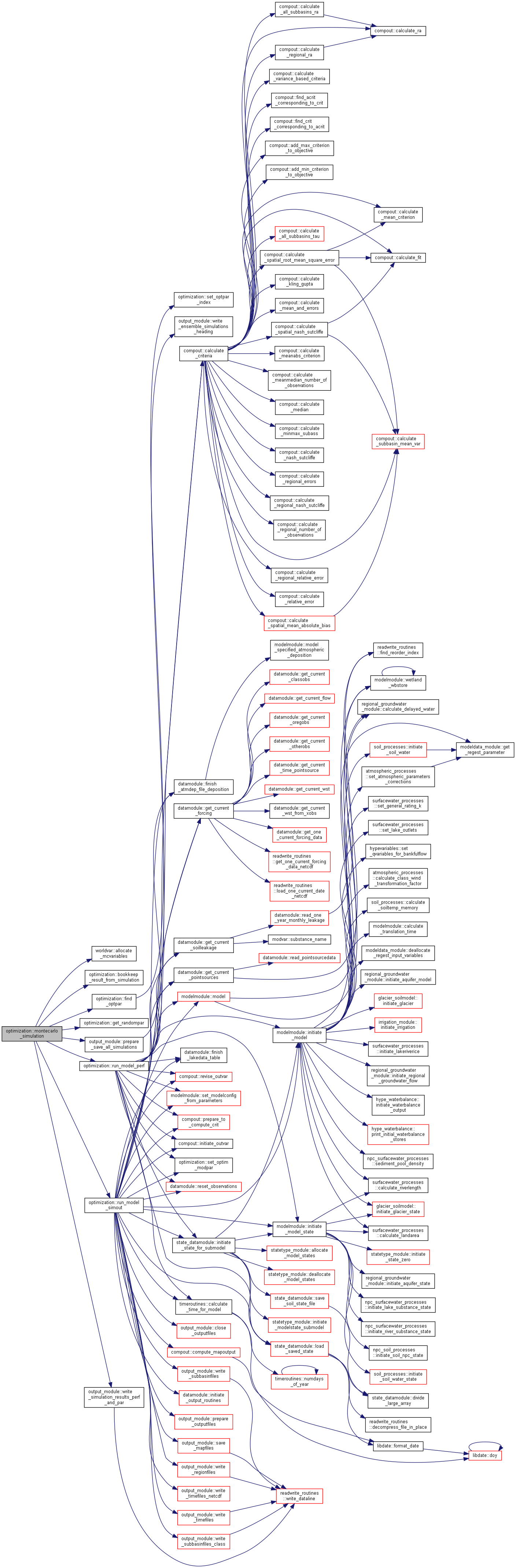

Here is the call graph for this function:

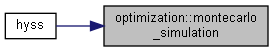

Here is the call graph for this function: Here is the caller graph for this function:

Here is the caller graph for this function:◆ new_brent_method()

| subroutine optimization::new_brent_method | ( | real, dimension(optim%cal_improvcrititer), intent(inout) | critLastVect, |

| real, dimension(optim%cal_improvparamiter, numoptimpar), intent(inout) | parLastTable, | ||

| type(snowicestatetype), intent(inout) | frozenstate, | ||

| type(soilstatetype), intent(inout) | soilstate, | ||

| type(aquiferstatetype), intent(inout) | aquiferstate, | ||

| type(riverstatetype), intent(inout) | riverstate, | ||

| type(lakestatetype), intent(inout) | lakestate, | ||

| type(miscstatetype), intent(inout) | miscstate | ||

| ) |

Brent algorithm for dimension-wise optimisation.

- Parameters

-

[in,out] critlastvect criterion saved for last iterations [in,out] parlasttable parameters saved for last iterations [in,out] frozenstate Snow and ice states [in,out] soilstate Soil states [in,out] aquiferstate Aquifer states [in,out] riverstate River states [in,out] lakestate Lake states [in,out] miscstate Misc states

Algorithm

Initiate Brent simulation; find parameters and optimization intervals, and read the starting set

Run simulation for the starting set of parameters

Repeatedly perform Brent steps, as long as max criteria improves more then tolerance between 2 iterations, and as long as max amount of iterations is not reached

- Perform line search, parameter-wise

- Perform diagonal step at Brent iteration end if required

- Check if iterations are enough and to be stopped

Here is the call graph for this function:

Here is the call graph for this function: Here is the caller graph for this function:

Here is the caller graph for this function:◆ param_scanning()

| subroutine, public optimization::param_scanning | ( | type(snowicestatetype), intent(inout) | frozenstate, |

| type(soilstatetype), intent(inout) | soilstate, | ||

| type(aquiferstatetype), intent(inout) | aquiferstate, | ||

| type(riverstatetype), intent(inout) | riverstate, | ||

| type(lakestatetype), intent(inout) | lakestate, | ||

| type(miscstatetype), intent(inout) | miscstate | ||

| ) |

This routine evaluates the criteria for systematic variation of two parameters, with two constant parameter stepsizes.

- Parameters

-

[in,out] frozenstate Snow and ice states [in,out] soilstate Soil states [in,out] aquiferstate Aquifer states [in,out] riverstate River states [in,out] lakestate Lake states [in,out] miscstate Misc states

Algorithm

Check number parameters is ok, and make sure results will be output to "calibration.log"

Initiate MonteCarlo simulation; find parameters and intervals for them to use

Calculate the step in each direction for gridded coverage of parameter space

Run simulation for each parameter value in grid

Write result to calibration.log

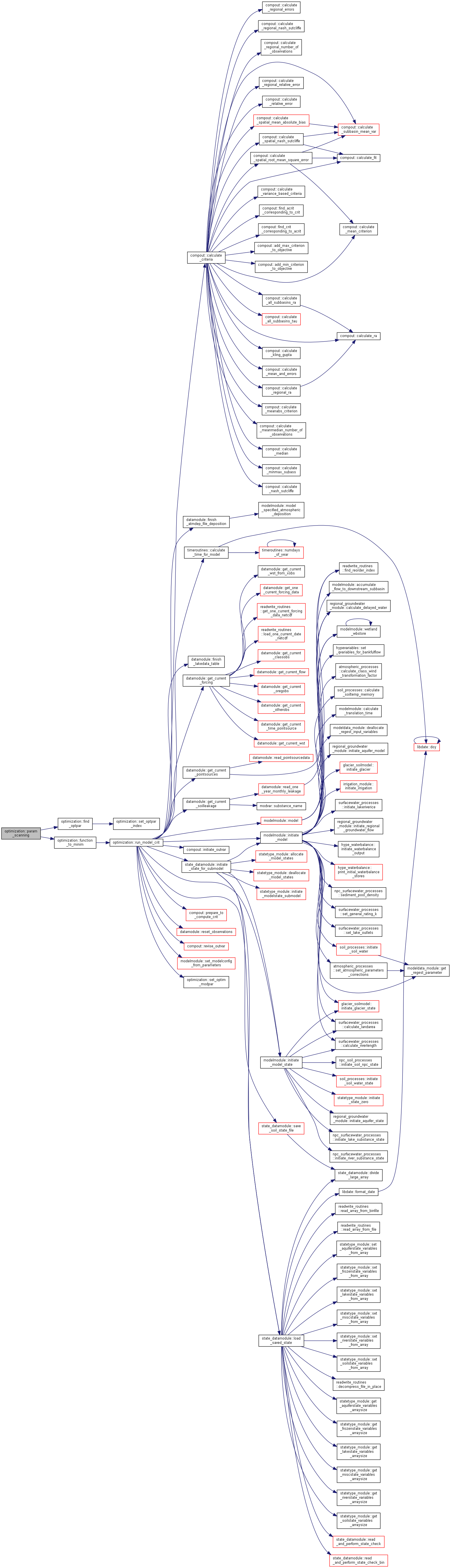

Here is the call graph for this function:

Here is the call graph for this function: Here is the caller graph for this function:

Here is the caller graph for this function:◆ print_calib_hysslog()

| subroutine optimization::print_calib_hysslog | ( | integer, intent(in) | iterCounter, |

| real, dimension(numoptimpar), intent(in) | param, | ||

| real, intent(in) | critVal, | ||

| real, intent(in) | gradNorm | ||

| ) |

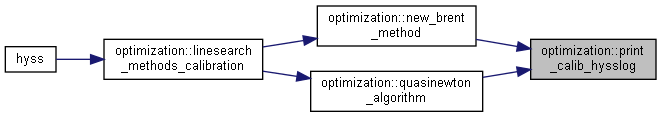

Here is the caller graph for this function:

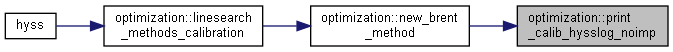

Here is the caller graph for this function:◆ print_calib_hysslog_noimp()

| subroutine optimization::print_calib_hysslog_noimp | ( | integer, intent(in) | iterCounter, |

| real, dimension(numoptimpar), intent(in) | param, | ||

| real, intent(in) | critVal | ||

| ) |

Here is the caller graph for this function:

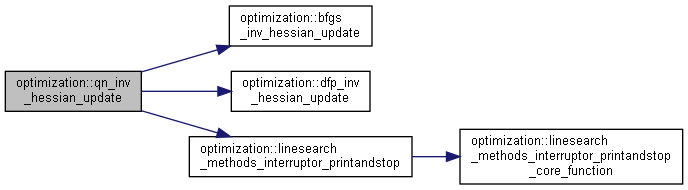

Here is the caller graph for this function:◆ qn_inv_hessian_update()

| subroutine optimization::qn_inv_hessian_update | ( | real, dimension(numoptimpar), intent(in) | deltaVector, |

| real, dimension(numoptimpar), intent(in) | deltaGrad, | ||

| real, dimension(numoptimpar, numoptimpar), intent(in) | invHessian, | ||

| real, dimension(numoptimpar, numoptimpar), intent(out) | invHessianNew, | ||

| logical, intent(out) | finished | ||

| ) |

This subroutine computes the update of the inverse Hessian matrix H for quasi-Newton methods as well as the steepest descent method.

Very clear math. details on both DFP and BFGS update formula (as well as on the quasi-Newton method in general) can be found in: "Numerical optimization" (second edition), J. Nocedal, S.J. Wright, Springer series in operational research, Springer 2006, chapter 6.1 (p. 135-143)

DFP: H_{k+1} = H_k + (s_k * s_k^T)/(y^T * s_k) - (H_k * y_k * y_k^T * H_k)/(y_k^T * H_k * y_k) Eq.(6.15) p. 139 in reference = H_k + (s_k * s_k^T)/denom - (H_k * y_k * y_k^T * H_k)/(y_k^T * H_k * y_k)

BFGS: H_{k+1} = (s_k * s_k^T)/(y^T * s_k) + [I - (s_k * y_k^T)/(y_k^T * s_k)] * H_k * [I - (y_k * s_k^T)/(y_k^T * s_k)] Eq.(6.17) p. 140 in reference = (s_k * s_k^T)/denom + [I - (s_k * y_k^T)/denom] * H_k * [I - (y_k * s_k^T)/denom]

where s_k resp. y_k [column vectors!] are the differences in parameters (deltaVector) resp. gradient (deltaGrad) between step k and k+1, denom = y^T * s_k, and H_k = inverse Hessian at step K Notation: vectors are assumed column vectors; all matrix/vector multiplications to be performed left-right

Implementing quasi-Newton methods in this way, the steepest descent method turns out to be just a particular case of quasi-Newton method, where the inverse Hessian is always kept as a unit matrix. Easy like on a Sunday morning :)

Here is the call graph for this function:

Here is the call graph for this function: Here is the caller graph for this function:

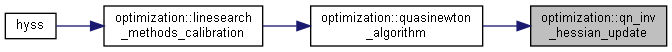

Here is the caller graph for this function:◆ quasinewton_algorithm()

| subroutine optimization::quasinewton_algorithm | ( | real, dimension(optim%cal_improvcrititer), intent(inout) | critLastVect, |

| real, dimension(optim%cal_improvparamiter, numoptimpar), intent(inout) | parLastTable, | ||

| type(snowicestatetype), intent(inout) | frozenstate, | ||

| type(soilstatetype), intent(inout) | soilstate, | ||

| type(aquiferstatetype), intent(inout) | aquiferstate, | ||

| type(riverstatetype), intent(inout) | riverstate, | ||

| type(lakestatetype), intent(inout) | lakestate, | ||

| type(miscstatetype), intent(inout) | miscstate | ||

| ) |

Quasi-Newton DFP or BFGS algorithms for gradient-based minimum search.

- Parameters

-

[in,out] critlastvect criterion saved for last iterations [in,out] parlasttable parameters saved for last iterations [in,out] frozenstate Snow and ice states [in,out] soilstate Soil states [in,out] aquiferstate Aquifer states [in,out] riverstate River states [in,out] lakestate Lake states [in,out] miscstate Misc states

Algorithm

Check numerical parameters.

Get the optimisation interval boundaries and the starting parameter set

Initiate quasi-Newton: evaluate function and gradient at current guess, and initialize inverse Hessian as unit matrix

Repeatedly perform quasi-Newton steps, as long as the numerical gradient norm is larger than the given threshold and the maximum allowed number of iterations is not exceeded

- Determine a new parameter set, by linear search of minimum in the direction between current and new best parameter set

- Compute the new numerical gradient of the gradient function

- Calculate the new inverse Hessian

- Update all three quantities: current vector, gradient, and inverse Hessian

- Check if iterations are enough and to be stopped

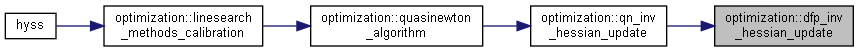

Here is the call graph for this function:

Here is the call graph for this function: Here is the caller graph for this function:

Here is the caller graph for this function:◆ reduce_parameter_space()

|

private |

Determine new bounds for the parameter space based on the best simulations sofar.

- Parameters

-

[in] mpar Dimension of parameters [in,out] parmin Parameter interval lower limit [in,out] parmax Parameter interval upper limit [in] npar Actual number of parameters

Here is the caller graph for this function:

Here is the caller graph for this function:◆ run_model_crit()

| subroutine optimization::run_model_crit | ( | integer, intent(in) | npar, |

| integer, intent(in) | mpar, | ||

| real, dimension(mpar), intent(in) | par, | ||

| type(snowicestatetype), intent(inout) | frozenstate, | ||

| type(soilstatetype), intent(inout) | soilstate, | ||

| type(aquiferstatetype), intent(inout) | aquiferstate, | ||

| type(riverstatetype), intent(inout) | riverstate, | ||

| type(lakestatetype), intent(inout) | lakestate, | ||

| type(miscstatetype), intent(inout) | miscstate, | ||

| real, intent(out) | criterion, | ||

| integer, intent(out) | status | ||

| ) |

Run a simulation of the model with parameters par. Calculate criterion.

- Parameters

-

[in] npar Number of parameters [in] mpar Maximum number of parameters [in] par Parameters [in,out] frozenstate Snow and ice states [in,out] soilstate Soil states [in,out] aquiferstate Aquifer states [in,out] riverstate River states [in,out] lakestate Lake states [in,out] miscstate Misc states [out] criterion Value of optimization criterion [out] status Subroutine error status

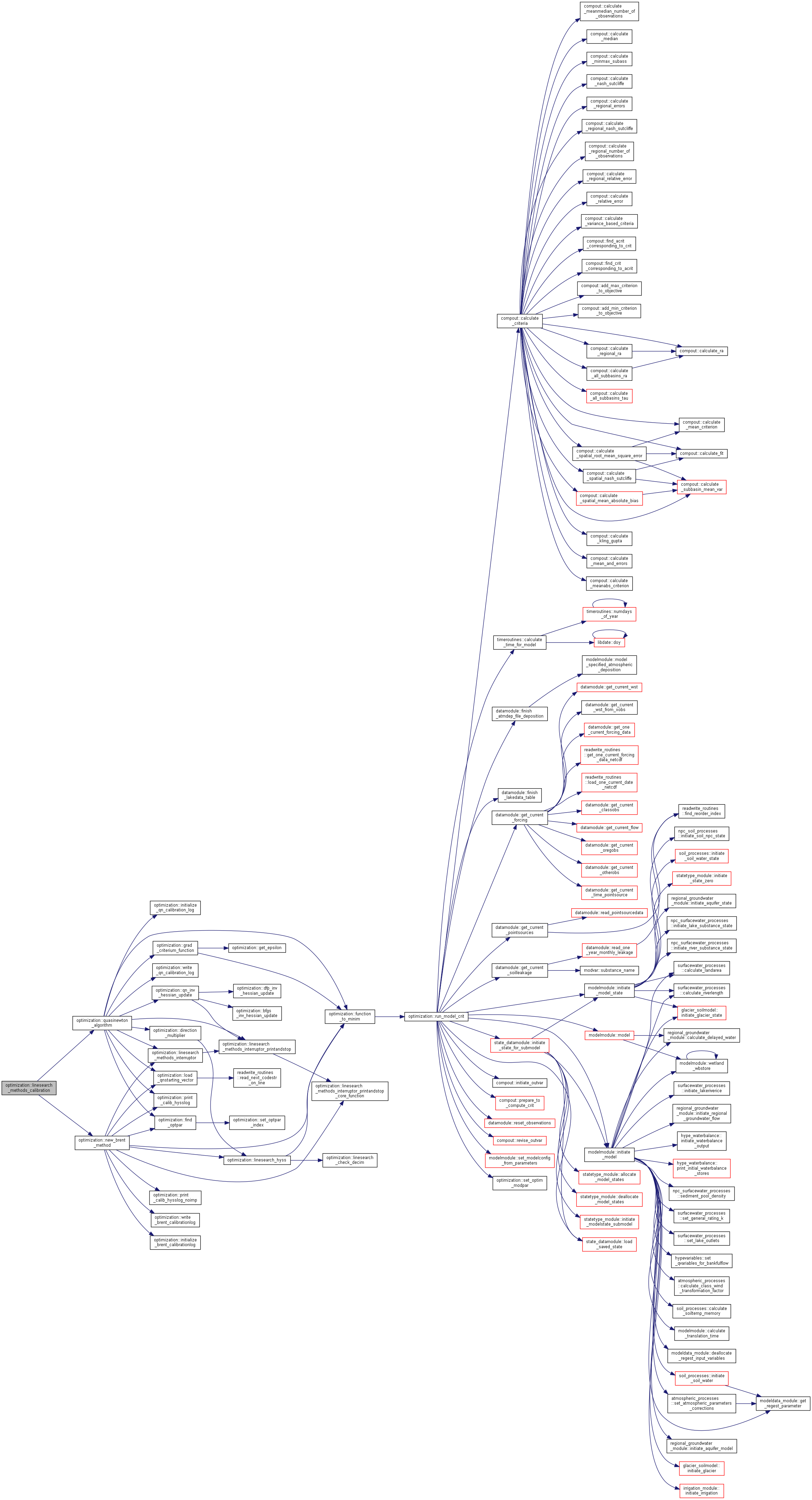

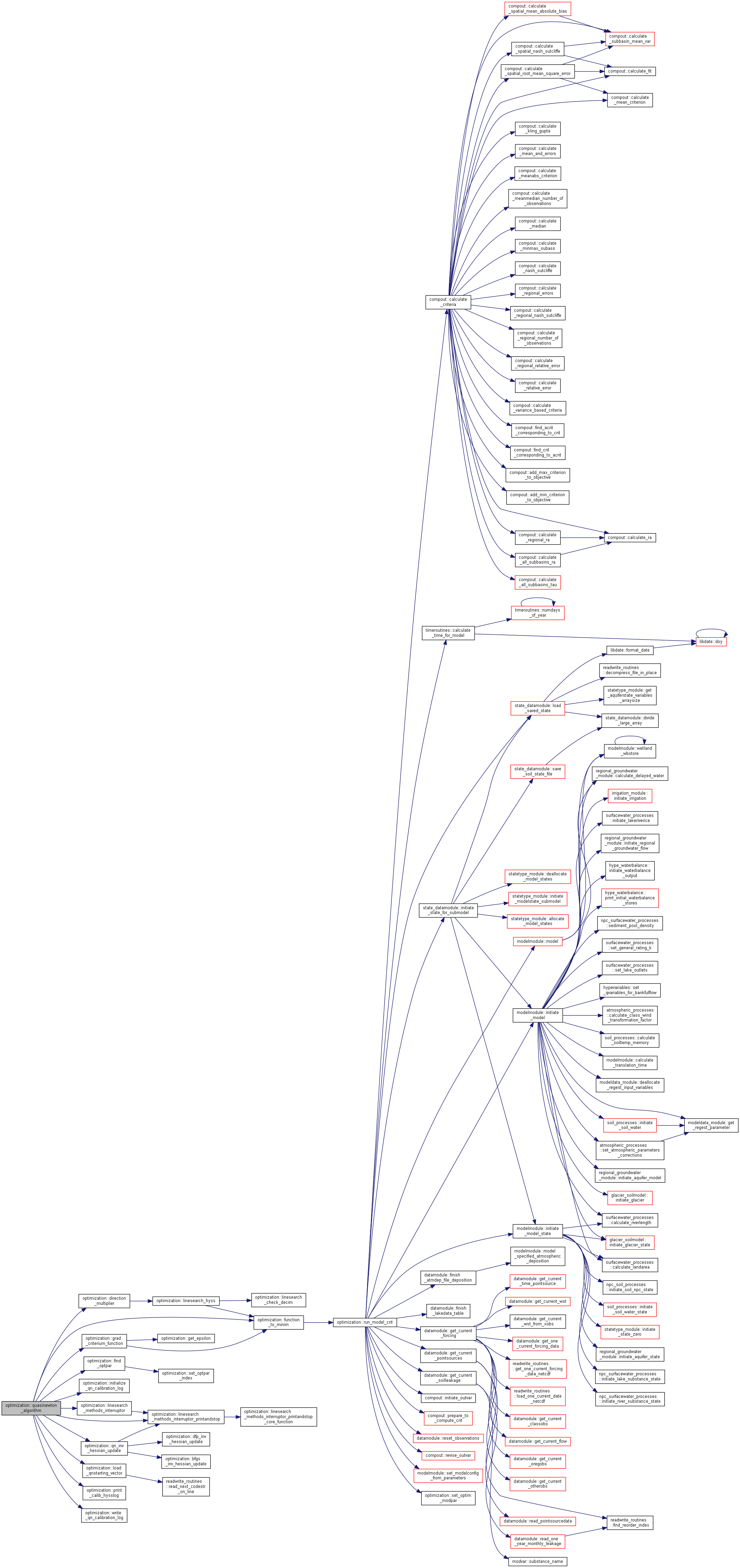

Here is the call graph for this function:

Here is the call graph for this function: Here is the caller graph for this function:

Here is the caller graph for this function:◆ run_model_perf()

| subroutine optimization::run_model_perf | ( | integer, intent(in) | npar, |

| integer, intent(in) | mpar, | ||

| real, dimension(mpar), intent(in) | par, | ||

| type(snowicestatetype), intent(inout) | frozenstate, | ||

| type(soilstatetype), intent(inout) | soilstate, | ||

| type(aquiferstatetype), intent(inout) | aquiferstate, | ||

| type(riverstatetype), intent(inout) | riverstate, | ||

| type(lakestatetype), intent(inout) | lakestate, | ||

| type(miscstatetype), intent(inout) | miscstate, | ||

| real, intent(out) | criterion, | ||

| real, dimension(maxperf,nacrit), intent(out) | performance, | ||

| integer, intent(out) | status, | ||

| real, intent(out), optional | condcrit, | ||

| real, intent(out), optional | condthres | ||

| ) |

Run a simulation of the model with parameters par. Calculate criterion and performance measures.

- Parameters

-

[in] npar Number of parameters [in] mpar Dimension of parameters-array [in] par Parameter values to be used for this simulation [in,out] frozenstate Snow and ice states [in,out] soilstate Soil states [in,out] aquiferstate Aquifer states [in,out] riverstate River states [in,out] lakestate Lake states [in,out] miscstate Misc states [out] criterion Value of optimization criterion [out] performance Simulation performance criteria [out] status Subroutine error status [out] condcrit conditional criterion value [out] condthres threshold of conditional criterion

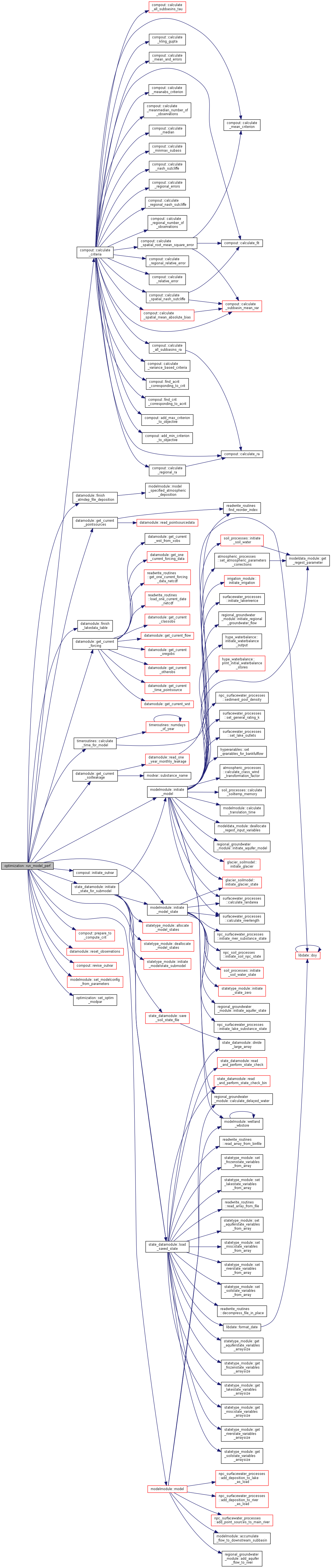

Here is the call graph for this function:

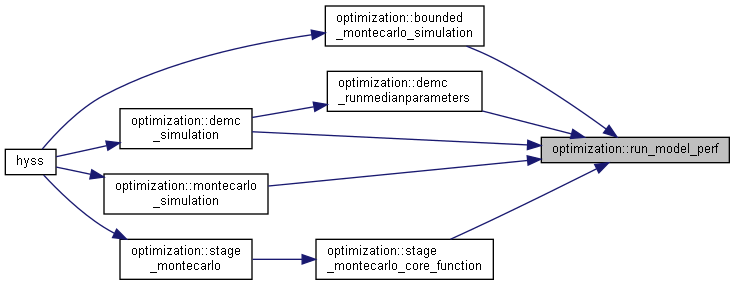

Here is the call graph for this function: Here is the caller graph for this function:

Here is the caller graph for this function:◆ run_model_simout()

| subroutine optimization::run_model_simout | ( | integer, intent(in) | npar, |

| integer, intent(in) | mpar, | ||

| real, dimension(mpar), intent(in) | par, | ||

| integer, intent(in) | iens, | ||

| logical, intent(in) | runens, | ||

| logical, intent(in) | allens, | ||

| type(snowicestatetype), intent(inout) | frozenstate, | ||

| type(soilstatetype), intent(inout) | soilstate, | ||

| type(aquiferstatetype), intent(inout) | aquiferstate, | ||

| type(riverstatetype), intent(inout) | riverstate, | ||

| type(lakestatetype), intent(inout) | lakestate, | ||

| type(miscstatetype), intent(inout) | miscstate, | ||

| real, intent(out) | criterion, | ||

| real, dimension(maxperf,nacrit), intent(out) | performance, | ||

| integer, intent(out) | status, | ||

| real, intent(out), optional | condcrit, | ||

| real, intent(out), optional | condthres | ||

| ) |

Run a simulation of the model with parameters par. Calculate criterion and performance measures Print out simulation results for all simulations.

- Parameters

-

[in] npar Number of parameters [in] mpar Dimension of parameters-array [in] par Parameter values to be used for this simulation [in] iens Current simulation [in] runens Flag for ensemble simulation [in] allens Flag for writing all ensemble results [in,out] frozenstate Snow and ice states [in,out] soilstate Soil states [in,out] aquiferstate Aquifer states [in,out] riverstate River states [in,out] lakestate Lake states [in,out] miscstate Misc states [out] criterion Value of optimization criterion [out] performance Simulation performance criteria [out] status Subroutine error status [out] condcrit conditional criterion value [out] condthres threshold of conditional criterion

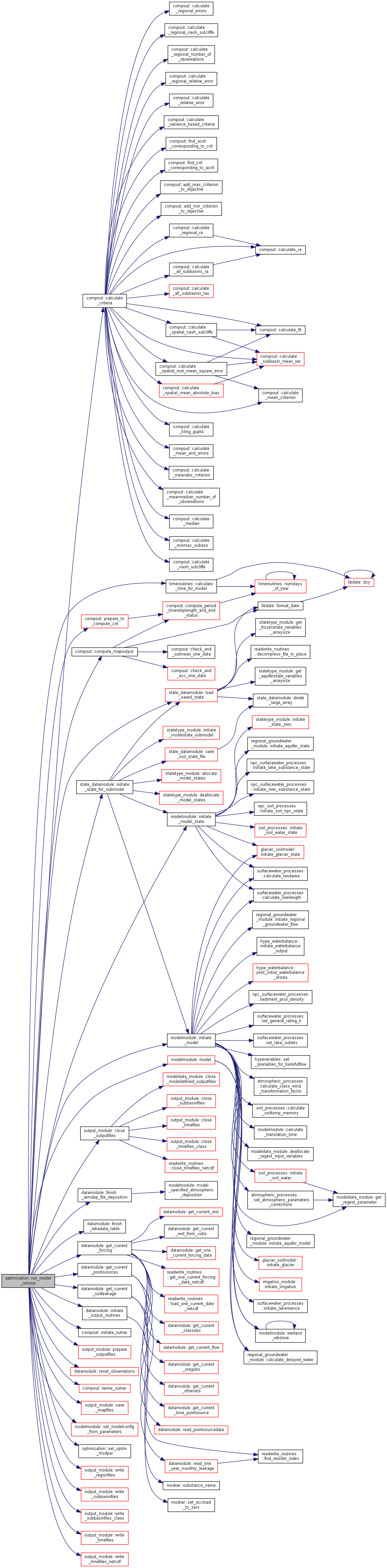

Here is the call graph for this function:

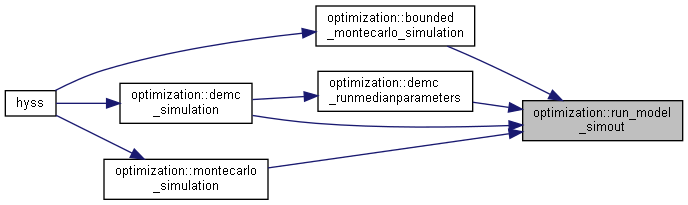

Here is the call graph for this function: Here is the caller graph for this function:

Here is the caller graph for this function:◆ set_optim_modpar()

| subroutine, public optimization::set_optim_modpar | ( | integer, intent(in) | npar, |

| integer, intent(in) | dpar, | ||

| real, dimension(dpar), intent(in) | par | ||

| ) |

Set the parameter variables to the values to be used for simulation.

Consequences Module modvar variables soilpar, landpar, genpar, basinpar, regpar and monthpar may change.

- Parameters

-

[in] npar Actual number of parameters [in] dpar dimension of par [in] par Array with parameter values to be used for this run

Here is the caller graph for this function:

Here is the caller graph for this function:◆ set_optpar_index()

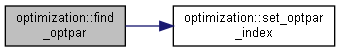

| subroutine, public optimization::set_optpar_index |

Find the parameters to be optimized and set the brent-routine parameter variables.

Consequences Module worldvar variable parindex is allocated and set

Algorithm

Allocate parindex

Find the parameters to be calibrated and assign information of their index

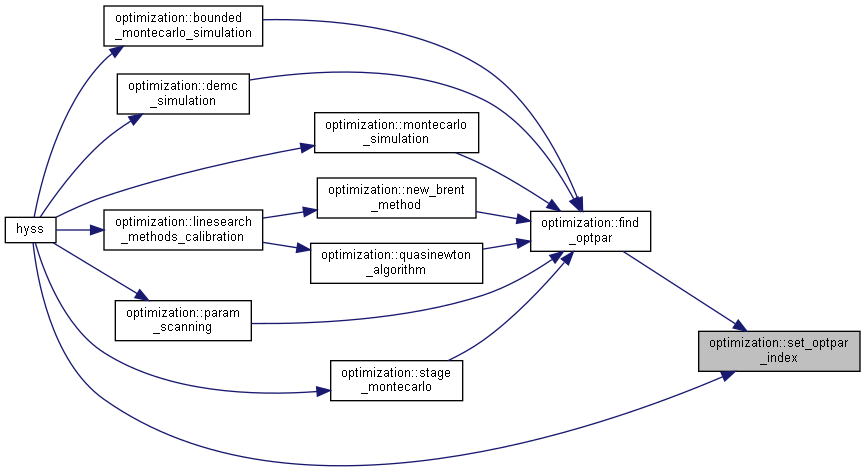

Here is the caller graph for this function:

Here is the caller graph for this function:◆ stage_montecarlo()

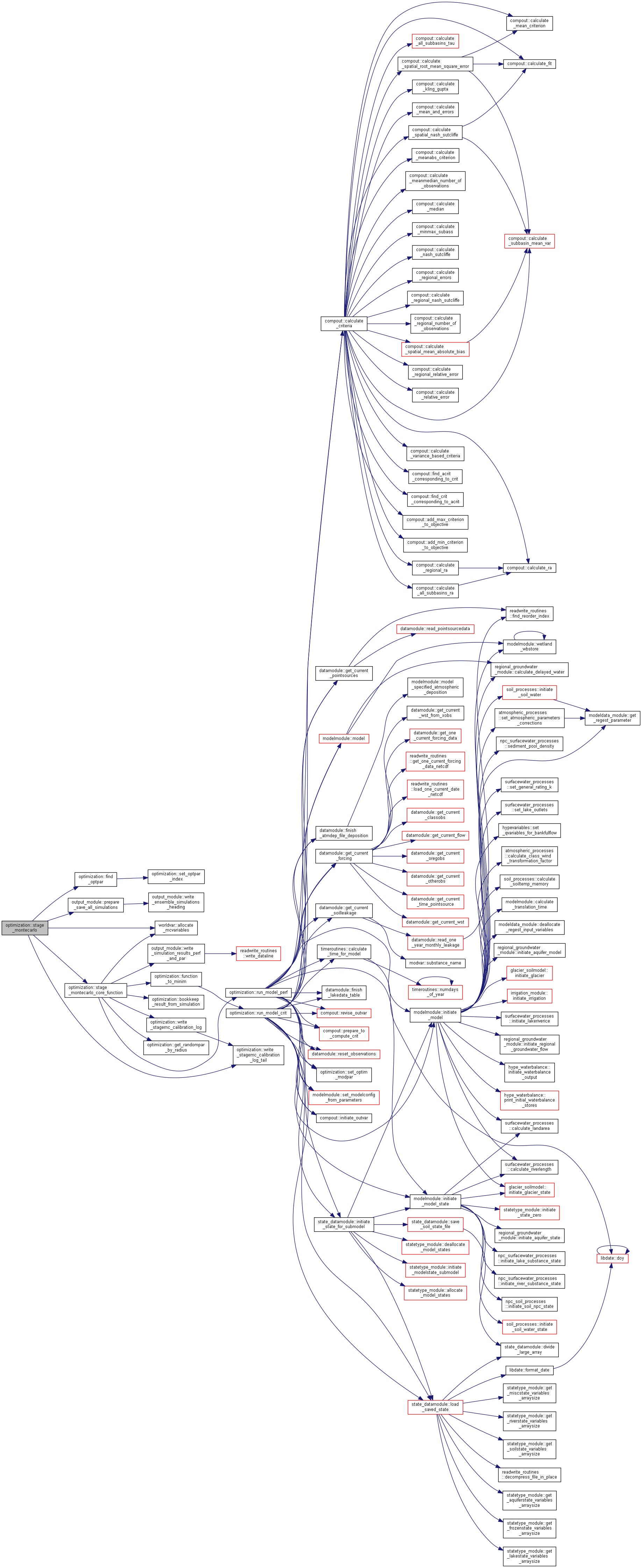

| subroutine, public optimization::stage_montecarlo | ( | type(snowicestatetype), intent(inout) | frozenstate, |

| type(soilstatetype), intent(inout) | soilstate, | ||

| type(aquiferstatetype), intent(inout) | aquiferstate, | ||

| type(riverstatetype), intent(inout) | riverstate, | ||

| type(lakestatetype), intent(inout) | lakestate, | ||

| type(miscstatetype), intent(inout) | miscstate | ||

| ) |

MonteCarlo optimisation method, stage-wise zooming on top results This is the "outer" part of the function, core part is defined as separate subroutine (see next)

- Parameters

-

[in,out] frozenstate Snow and ice states [in,out] soilstate Soil states [in,out] aquiferstate Aquifer states [in,out] riverstate River states [in,out] lakestate Lake states [in,out] miscstate Misc states

Algorithm

First of all, check that the numerical zoom factor is not larger than 1 (otherwise the method will be extending the domain, not reducing it!)

Initiate MonteCarlo simulation; find parameters and intervals for them to use

Proceed with simulations, stage by stage, for each stage update the parameter space by reducing the interval for each parameter centered around the best points

Print progression in hyss.log

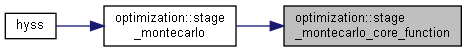

Here is the call graph for this function:

Here is the call graph for this function: Here is the caller graph for this function:

Here is the caller graph for this function:◆ stage_montecarlo_core_function()

| subroutine optimization::stage_montecarlo_core_function | ( | logical, intent(in) | writeall, |

| integer, intent(in) | icenter, | ||

| integer, intent(in) | istage, | ||

| integer, intent(in) | nruns, | ||

| real, dimension(numoptimpar), intent(in) | parCenter, | ||

| real, dimension(numoptimpar), intent(in) | parRadStage, | ||

| real, dimension(numoptimpar), intent(in) | parMin, | ||

| real, dimension(numoptimpar), intent(in) | parMax, | ||

| type(snowicestatetype), intent(inout) | frozenstate, | ||

| type(soilstatetype), intent(inout) | soilstate, | ||

| type(aquiferstatetype), intent(inout) | aquiferstate, | ||

| type(riverstatetype), intent(inout) | riverstate, | ||

| type(lakestatetype), intent(inout) | lakestate, | ||

| type(miscstatetype), intent(inout) | miscstate | ||

| ) |

Core of loop in "stage_MonteCarlo" subroutine.

- Parameters

-

[in] writeall flag for write all result to file [in] icenter current center point [in] istage current stage, runs from 1 to optimnruns_stages [in] nruns amount of MC runs for the current stage [in] parcenter Current param space center within param space for random param generation [in] parradstage Current param space radii within param space for random param generation [in] parmin Absolute param space minimum boundary [in] parmax Absolute param space maximum boundary [in,out] frozenstate Snow and ice states [in,out] soilstate Soil states [in,out] aquiferstate Aquifer states [in,out] riverstate River states [in,out] lakestate Lake states [in,out] miscstate Misc states

Algorithm

Run a MonteCarlo simulation; nn simulations, generating new random parameter values for each simulation

Meanwhile keep track of the num_ens best simulations for next stage

Here is the call graph for this function:

Here is the call graph for this function: Here is the caller graph for this function:

Here is the caller graph for this function:◆ write_brent_calibrationlog()

| subroutine optimization::write_brent_calibrationlog | ( | real, dimension(numoptimpar), intent(in) | par, |

| real, dimension(numoptimpar), intent(in) | parStep, | ||

| real, dimension(numoptimpar), intent(in) | direction, | ||

| real, dimension(numoptimpar), intent(in) | newPar, | ||

| real, intent(in) | lambdaBest, | ||

| real, intent(in) | critBest | ||

| ) |

Here is the caller graph for this function:

Here is the caller graph for this function:◆ write_demc_calibration_log()

| subroutine optimization::write_demc_calibration_log | ( | integer, intent(in) | genCounter, |

| integer, intent(in) | popCounter | ||

| ) |

Write progress of DEMC simulation to Calibration.log.

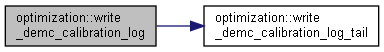

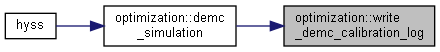

Here is the call graph for this function:

Here is the call graph for this function: Here is the caller graph for this function:

Here is the caller graph for this function:◆ write_demc_calibration_log_tail()

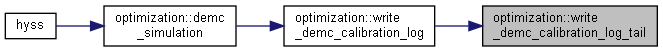

| subroutine optimization::write_demc_calibration_log_tail | ( | integer, intent(in) | funit | ) |

- Parameters

-

[in] funit file unit of calibration.log

Here is the caller graph for this function:

Here is the caller graph for this function:◆ write_qn_calibration_log()

| subroutine optimization::write_qn_calibration_log | ( | real, dimension(numoptimpar), intent(in) | par, |

| real, dimension(numoptimpar), intent(in) | gradVect, | ||

| real, intent(in) | gradNorm, | ||

| real, dimension(numoptimpar,numoptimpar), intent(in) | invHessian, | ||

| real, dimension(numoptimpar), intent(in) | direction, | ||

| real, intent(in) | lambdaBest, | ||

| real, intent(in) | critBest, | ||

| integer, intent(in) | iteratCounter | ||

| ) |

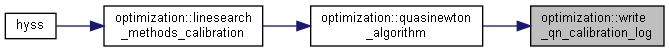

Here is the caller graph for this function:

Here is the caller graph for this function:◆ write_stagemc_calibration_log()

| subroutine optimization::write_stagemc_calibration_log | ( | integer, intent(in) | runCounter, |

| integer, intent(in) | centerCounter, | ||

| integer, intent(in) | stageCounter | ||

| ) |

Here is the call graph for this function:

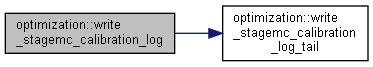

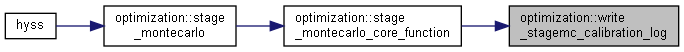

Here is the call graph for this function: Here is the caller graph for this function:

Here is the caller graph for this function:◆ write_stagemc_calibration_log_tail()

| subroutine optimization::write_stagemc_calibration_log_tail | ( | integer, intent(in) | funit | ) |

- Parameters

-

[in] funit file unit of calibration.log

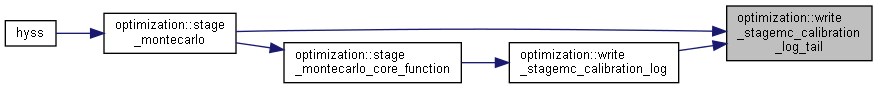

Here is the caller graph for this function:

Here is the caller graph for this function: